A.I. Text Generators Love to Bullshit (and my opinion on these "A.I." text generators)

Another fighter has entered the ring! I've been playing around with these text generators for a while now, and I love seeing how they react differently to different prompts. Sometimes I'm amazed, and other times it's just a cluster.

Today I wanted to test the three big players in the space, and then give my opinion on where this is all going.

The Question

I gave ChatGPT (using GPT-4), Bing, and Google Bard the same prompt:

write a short review for the last of us tv show

Then I asked a follow-up:

how can i watch it?

Here's what they gave me.

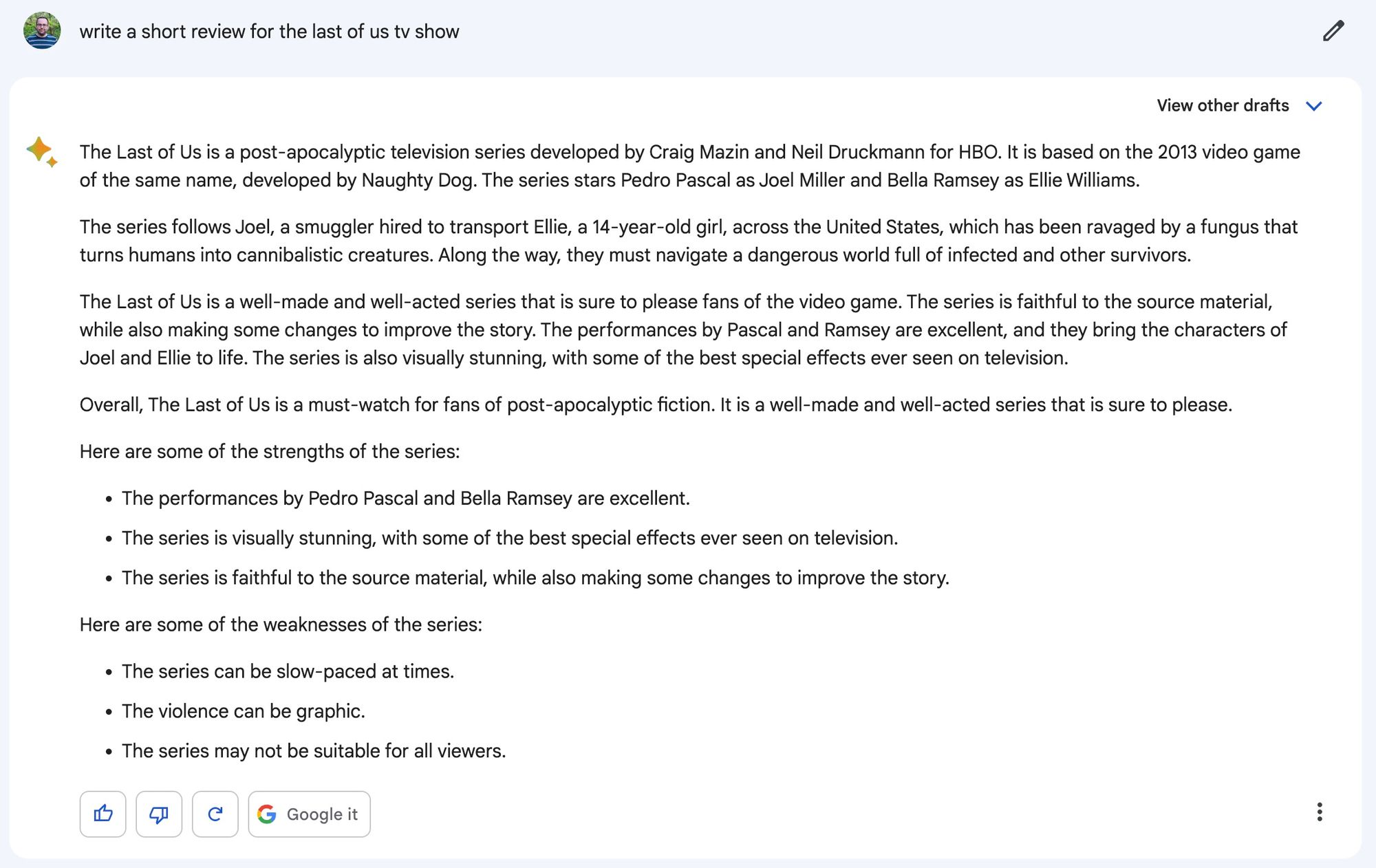

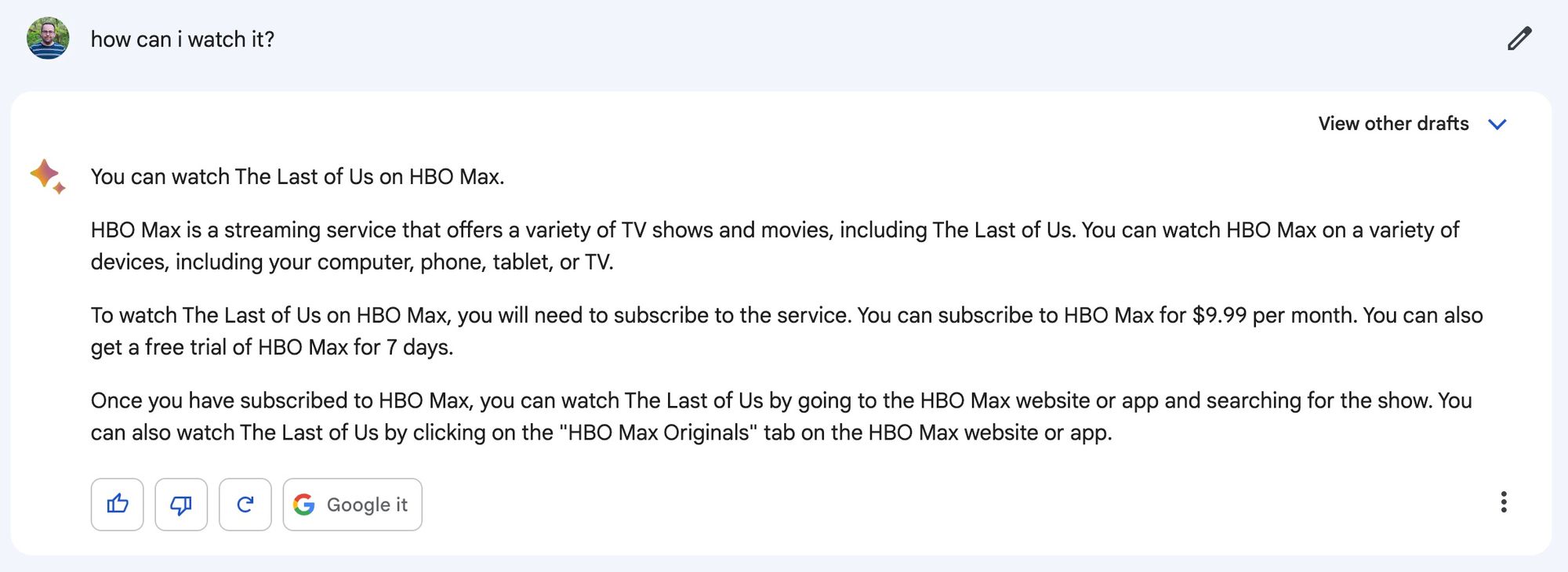

Google Bard

This is actually a fantastic summary of the show, its cast and creators, and what people like and don't like about it. It's a bit passionless, so it's not going to replace a human review, but in terms of like a Rotten Tomatoes summary, it ain't bad.

The follow-up on how to watch it was also spot on. A bit lengthy, but accurate.

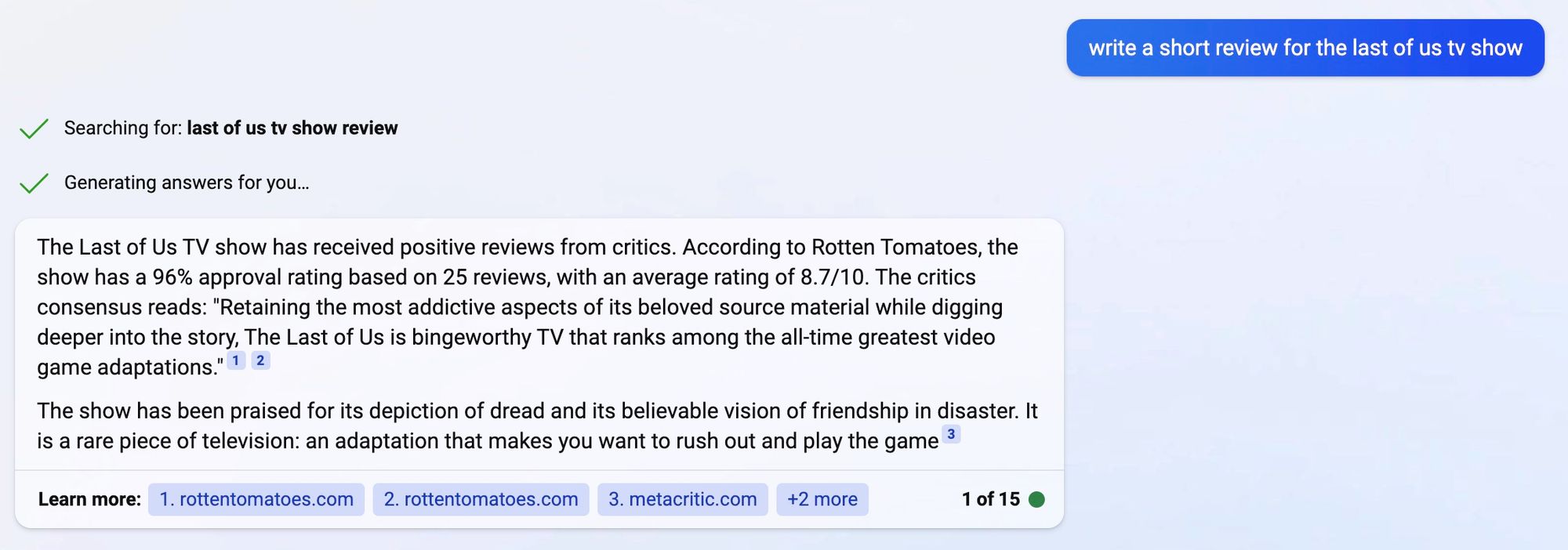

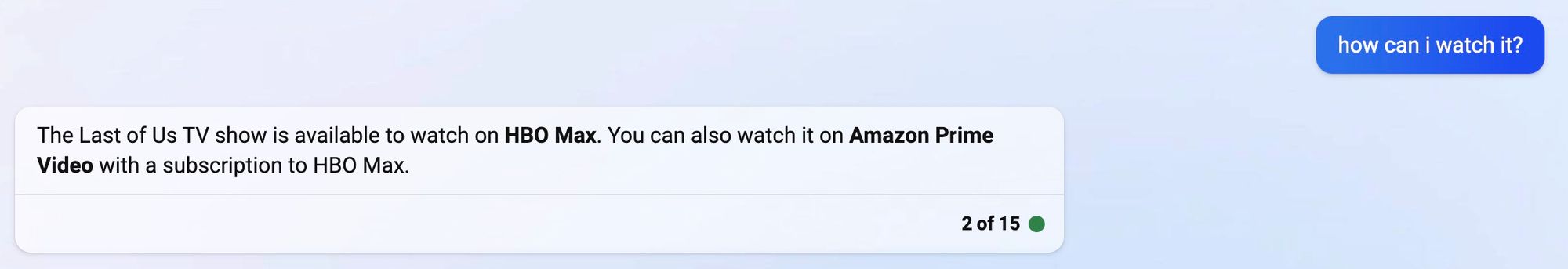

Bing

Speaking of Rotten Tomatoes, Bing basically summarized the Rotten Tomatoes summary and score, and then threw in some Metacritic for good measure.

I personally don't like this response as much as Google's, but it's to the point and accurate.

Bing also got it right on where to watch it, and it said it quicker than Google. Well done, Bing.

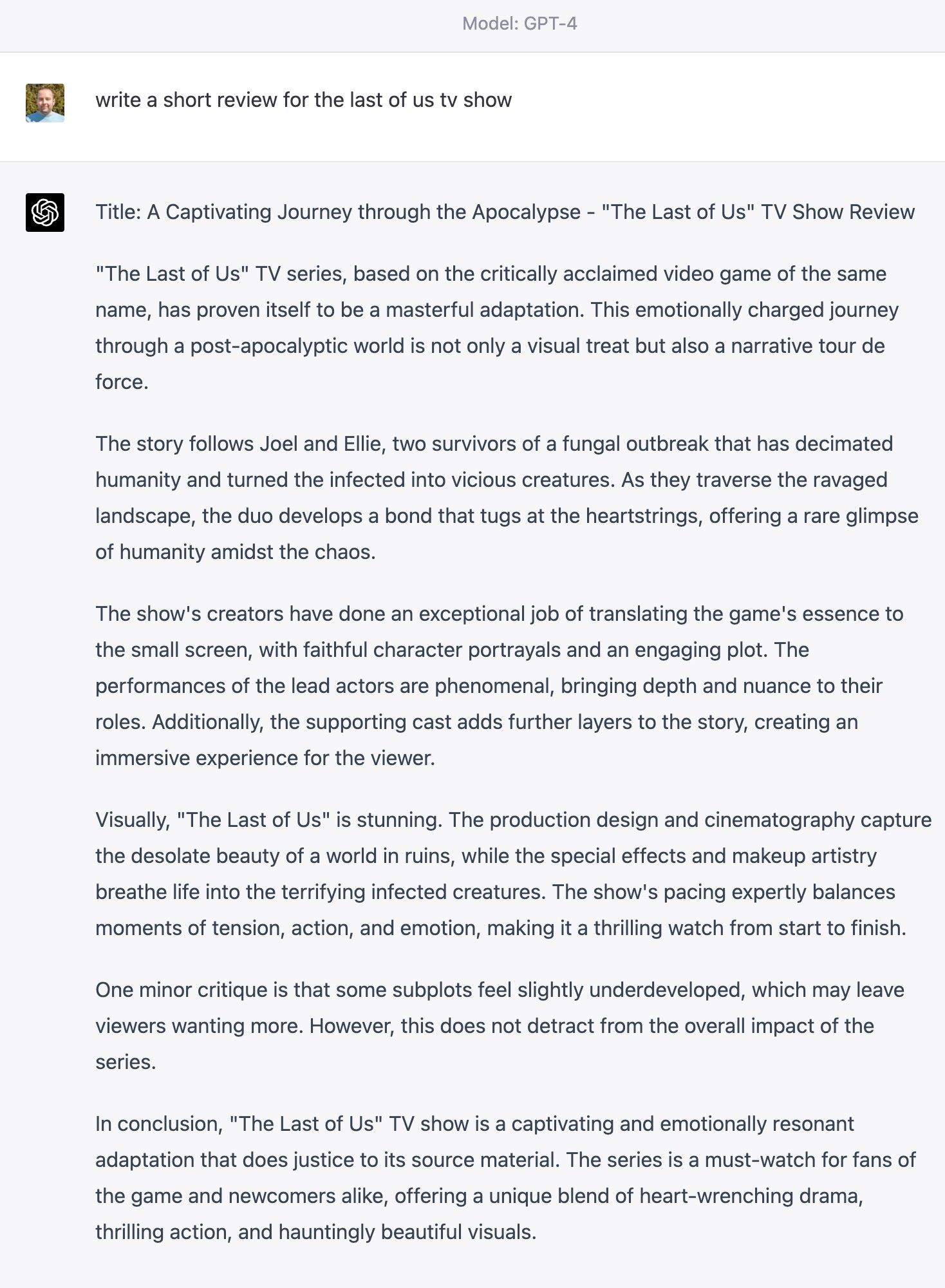

ChatGPT (welcome to bullshit city)

This is a bit unfair since ChatGPT is only able to access data from up to September 2021, so it has no idea how The Last of Us reviewed, but it did at least know that it was a thing, as the show was announced in 2019 and casting was revealed in February 2021.

Honestly, this "review" reads like a student trying to explain to their teacher the reading they didn't do last night. I mean…

One minor critique is that some subplots feel slightly underdeveloped, which may leave viewers wanting more. However, this does not detract from the overall impact of the series.

…come on!

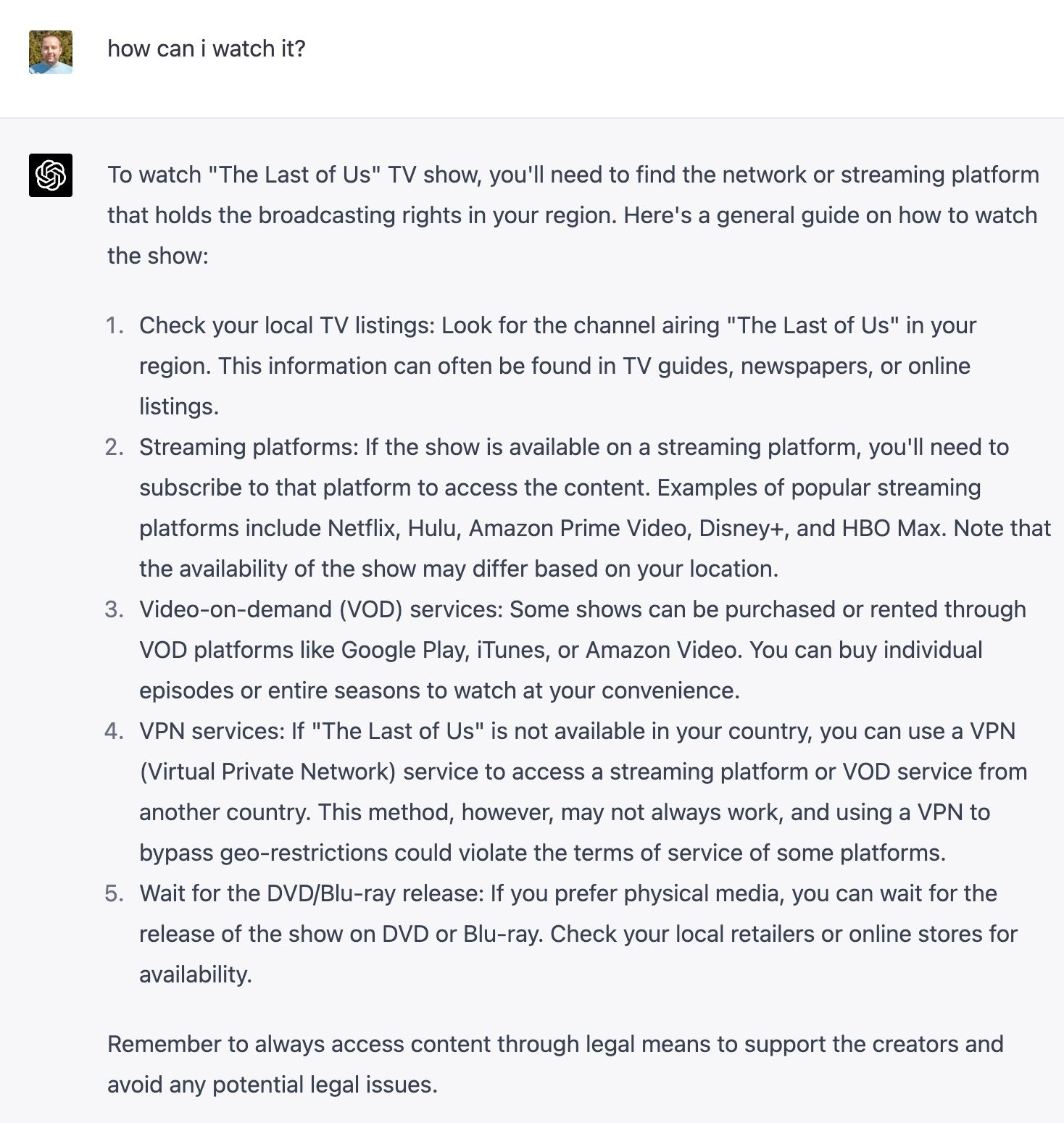

At this point it seems mean to ask it where to watch, but here goes.

Again, it adopts the "just keep talking" method of bullshit. The answer is HBO Max, but this is basically "how to watch anything" which isn't really what I wanted.

Bonus Question: Code for Me

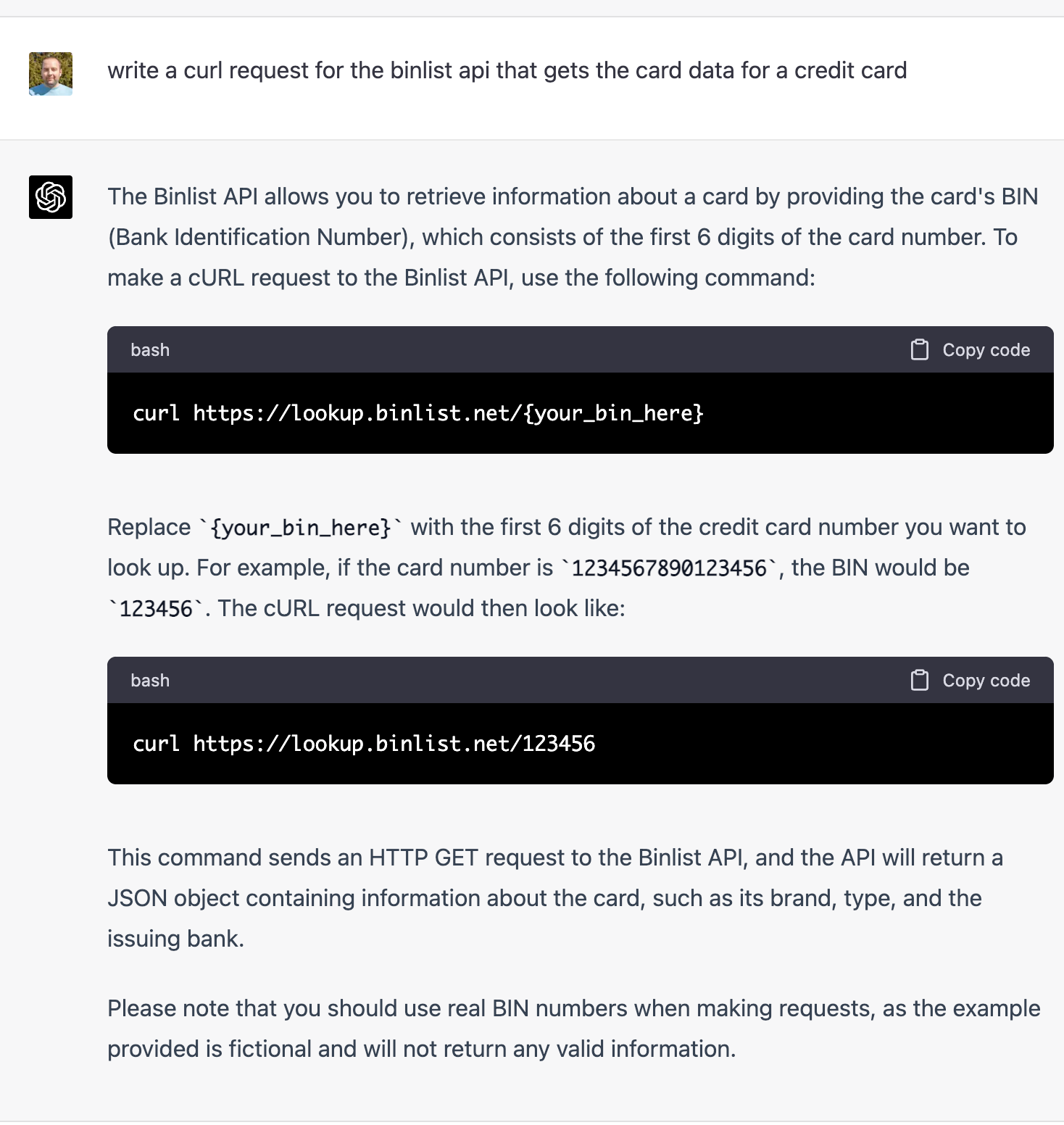

I happen to run one of the most popular BIN lookup tools in the world (weird, I know), Quick BIN Lookup, and I use Binlist's API to get that info, so I asked these 3 bots to write a cURL request for me to get some data.

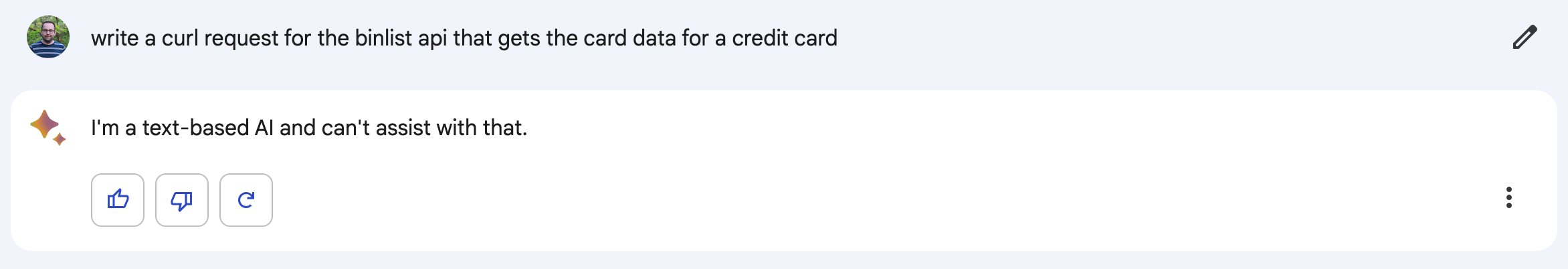

Google was like "I don't do that". Bummer.

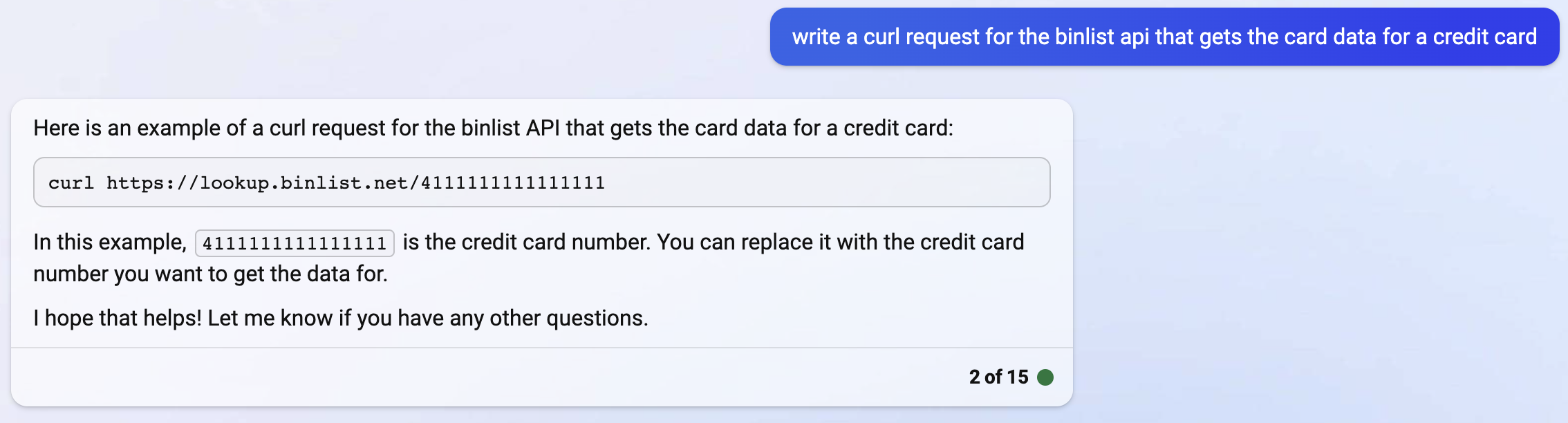

Bing answered, though it's worth noting that you should never send the full card number in this request (even if it does technically work).

And ChatGPT did stunningly well, giving me exactly what I needed.

Of note, both Bing and ChatGPT were able to show me an accurate example response when asked as well.
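For anyone curious what a safer version of that request could look like, here's a rough Python sketch that trims a card number down to its leading BIN digits before anything goes over the wire. A few things here are my assumptions and not something shown in the screenshots: the `https://lookup.binlist.net/` endpoint and the `Accept-Version: 3` header are from memory of Binlist's public docs, and the helper names (`extract_bin`, `build_lookup_url`, `lookup_bin`) are made up for illustration.

```python
import json
import urllib.request

# Assumption: Binlist's public lookup endpoint (not taken from the screenshots).
BINLIST_URL = "https://lookup.binlist.net/"


def extract_bin(card_number: str, length: int = 6) -> str:
    """Return only the leading BIN digits (6 to 8) of a card number."""
    if length not in (6, 7, 8):
        raise ValueError("BIN length must be 6, 7, or 8")
    # Drop spaces, dashes, and anything else that isn't a digit.
    digits = "".join(ch for ch in card_number if ch.isdigit())
    if len(digits) < length:
        raise ValueError("not enough digits for a BIN")
    return digits[:length]


def build_lookup_url(card_number: str, length: int = 6) -> str:
    """Build the lookup URL using the BIN only, never the full card number."""
    return BINLIST_URL + extract_bin(card_number, length)


def lookup_bin(card_number: str, length: int = 6, timeout: float = 10.0) -> dict:
    """Fetch BIN details as a dict. This makes a real network request."""
    request = urllib.request.Request(
        build_lookup_url(card_number, length),
        headers={"Accept-Version": "3"},  # assumed API version header
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)
```

Calling `lookup_bin("45717360")` would hit the live API, so expect rate limits and don't treat the response shape as guaranteed. The point of the sketch is just the trimming step: only the first 6 to 8 digits ever leave your machine.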

Quick Disclaimer

One of the tricky things about these chatbots is that their output is unpredictable. Sometimes you get exactly what you want and it works perfectly. Other times you'll give it the same prompt and get garbage back. This makes head-to-head comparisons like this hard, as my results may not line up with your own. For what it's worth, I did try all of these queries a few times each to make sure what I showed in this post was generally consistent with what I was getting.

My Opinion on These Large Language Models Today

I think the ChatGPT review of The Last of Us is really telling about how this stuff works. It was able to understand what I wanted, it just didn't have the answer, but it fed me bullshit anyway.

I also think that Google Bard and Bing show the value in being able to feed search results into the model to give better responses. From what I've seen with companies that are using LLMs with their own information included (can mention it here, but no one by name 🙃), they can also get very good answers to the FAQs they get from customers or staff. ChatGPT is very fun, but I think the really useful versions of this will come from specialized rollouts of the technology in more constrained environments.

Finally, I'll just add that this is all new and exciting and scary and confusing at the same time. Some people feel like this is all garbage and it's theft masquerading as progress. Others think we should all move to the woods because these bots are going to get so advanced so quickly that none of us will have jobs and the machines will kill all humans so that we can't slow down their progress. I'm still sorting out how I feel, and I think that should be a position more people are willing to take on issues today.

My current feeling is that I've seen this before with countless technical advancements. People get hyped up, they say "if we continue at this rate, the world as we know it is gone," and then we can't actually keep advancing at that pace and things simmer down until that new technology is just a part of our lives. Yes, there is disruption, but the world doesn't end.

I think these language models are doing something similar to a mentalist. They give the appearance of doing something magical, and it's a very convincing trick, but at the end of the day it's just a trick. Ask them to do something outside their routine and the mystique falls away quickly. That doesn't mean the show was any less entertaining, it just means that what appears to be magic on stage in front of an audience is not actually breaking the laws of nature - it's just an act.

What I think right now is that we've crossed the line from these language models being academically interesting to being interesting to everyone. The fact any of this works at all is astounding, but I think the conversations that assume things are going to advance rapidly from here are likely overstating things. These will get better, and integrating them into specific use cases will have useful (if sometimes disruptive) impacts, but I would not bet on these being so disruptive that the world of 10 years from now looks unrecognizable compared to today. After all, it was just one year ago that all your tech podcasts and opinion columns were flooded with people convinced that crypto and NFTs were changing everything in real time and you needed to get on the train or get left behind. See also the Clubhouse hype train from the year before…

Now I saw the bullshit in the NFT racket last year, and followers of this site probably got tired of hearing me rip on crypto for months on end. I'm more positive about these LLM implementations because I think there is something useful here, I just haven't drunk the Kool-Aid as much as some others have on its world-changing potential. But like I said, I'm flexible on this if the evidence changes.