You can’t just trust everything you read…

This morning I’m watching Candyman from 1992 and there’s a joke about how you can’t just trust everything you read in the newspaper.

I grew up in the 2000s and was keenly aware that you couldn’t just trust everything you read online.

It’s 2025 and people say you can’t just trust everything LLMs output.

LLM critics bring up the fact that you can't just trust every single thing LLMs spit out as if it's a gotcha that makes the whole endeavor null and void, as if this hasn't been the case for every medium ever.

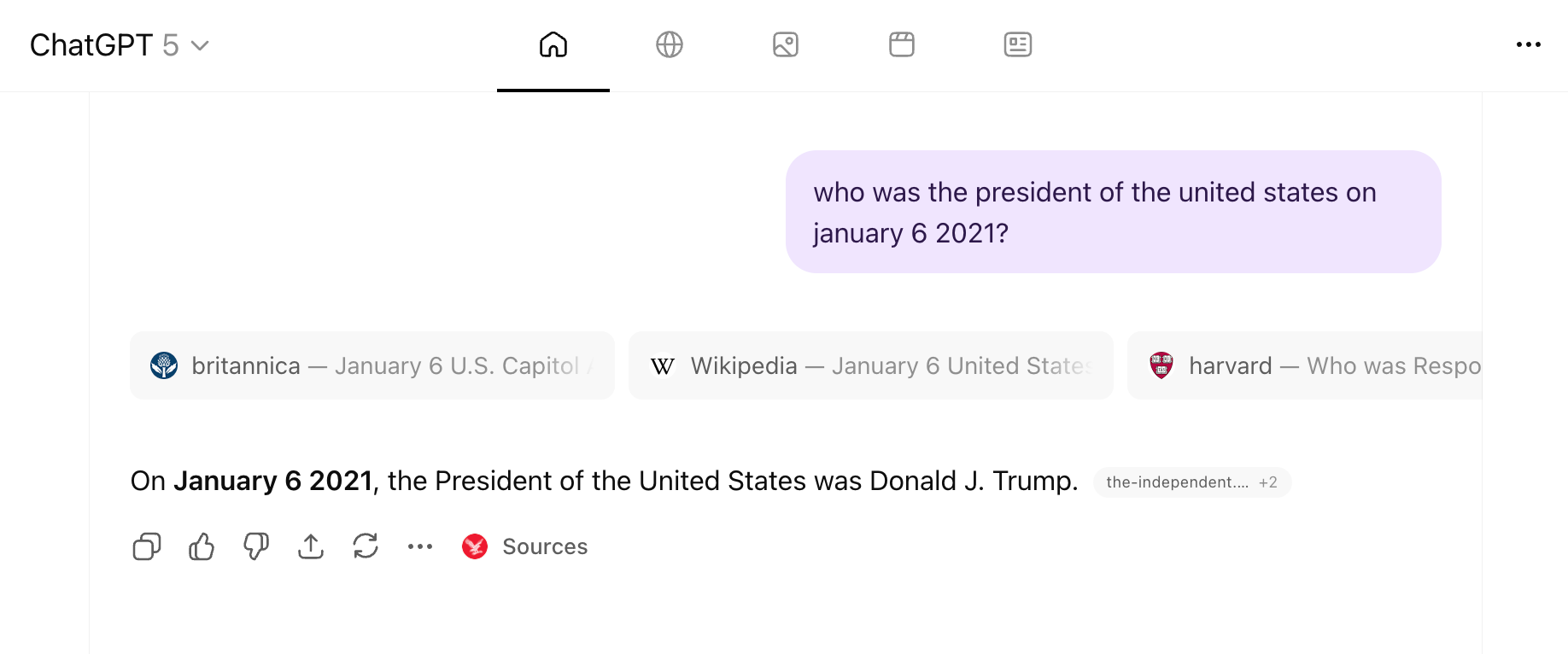

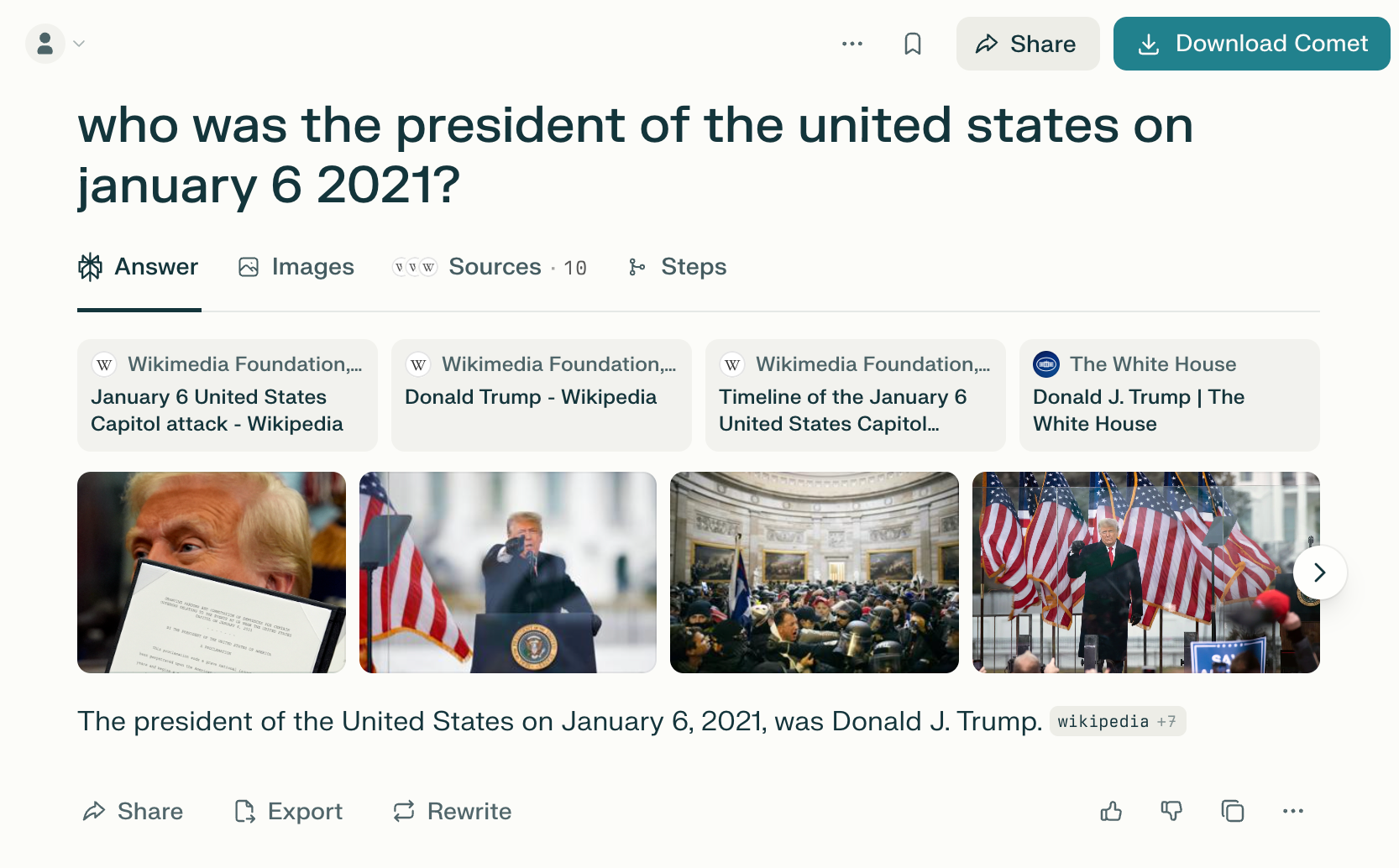

Obviously, the fewer mistakes LLMs make, the better, and there has been a meaningful improvement in this area since ChatGPT launched. As the critics are keen to point out, these models don't "know" anything, so it's critical that we humans make a real effort to improve their accuracy. It's why I'm encouraged by tools like ChatGPT inside OpenAI's new Atlas browser as well as Perplexity's search features, both of which perform web searches on factual questions and prominently cite those results so you can check them.

I'm just saying that "you can't just trust everything you read…" has always been true; you can append any written medium to the end of that statement. Don't believe me? Well, I guess you can't just trust everything you read on a personal, 100% human-written blog either. 😉