Another study on LLM energy use

This study was linked to by The Verge today, and as someone who keeps looking at the energy costs of AI and wondering what he’s missing, one of the opening lines in the study’s executive summary really caught my eye:

Electricity consumption from data centres, artificial intelligence (AI) and the cryptocurrency sector could double by 2026.

Okay, that sounds like a big deal, but looking at numbers for a while makes a few things in this one sentence perk my ears up:

- Data centers for AI, crypto, and everything else are bundled together.

- How much energy do data centers worldwide use today?

Thankfully, the summary addresses the second question immediately:

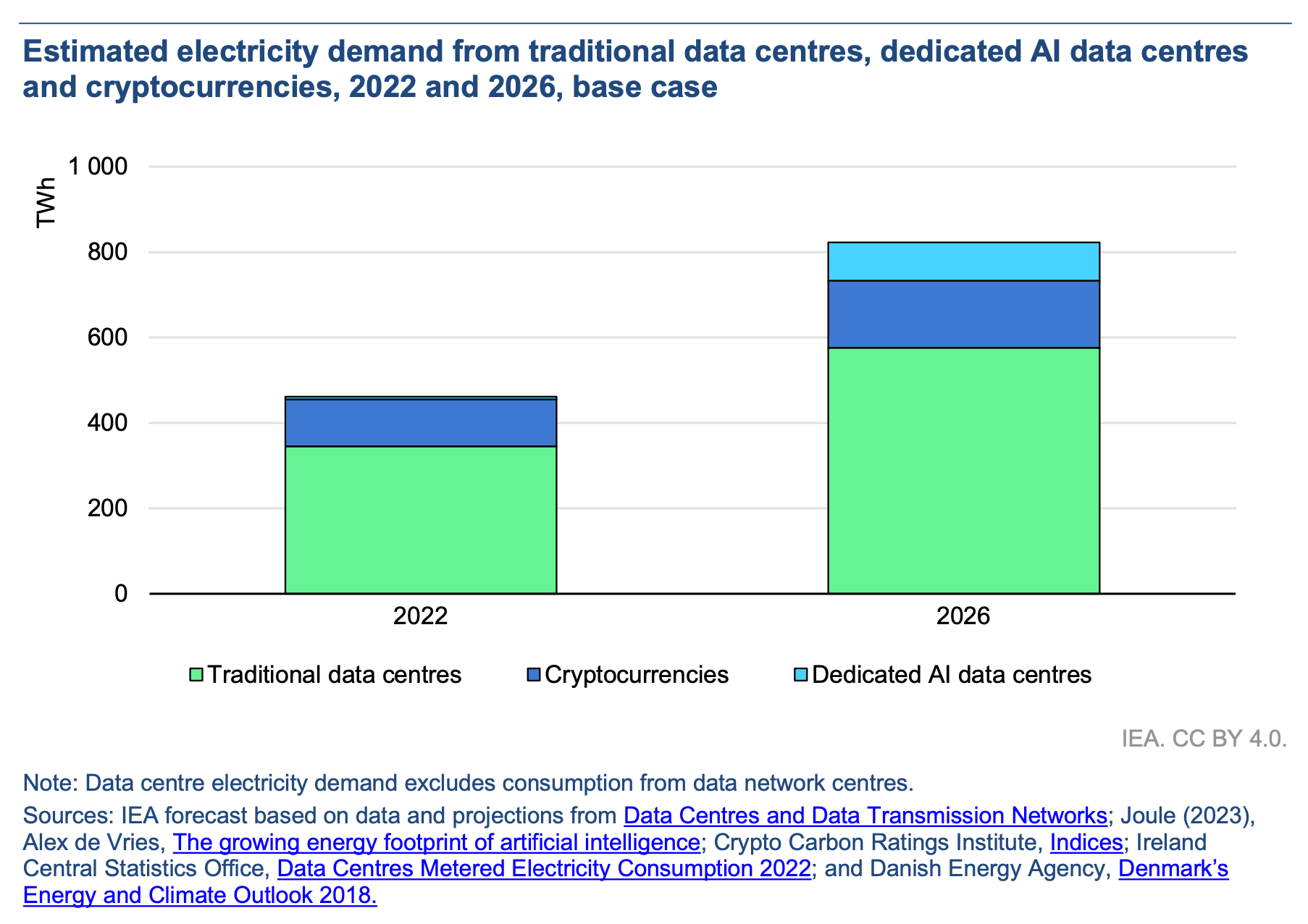

Data centres are significant drivers of growth in electricity demand in many regions. After globally consuming an estimated 460 terawatt-hours (TWh) in 2022, data centres’ total electricity consumption could reach more than 1 000 TWh in 2026. This demand is roughly equivalent to the electricity consumption of Japan.

Okay, so currently data centers worldwide use about half as much electricity as all of Japan (unclear if their data centers are double-counted, but probably not a huge difference either way) and if it doubles then it’ll be as much as Japan. The next obvious question is how much electricity Japan uses. This study looks at national energy consumption and this Wikipedia article summarizes the data nicely. Long story short, Japan uses around 3.7% of the world’s electricity. That’s absolutely a lot, even if you consider how much of the world operates on things in data centers. This becomes less of an issue as we move more and more to clean energy sources, but those are estimated to account for about 30% of worldwide energy creation today, so we’re not at my dream state of having so much clean energy that we don’t even worry about energy consumption anymore.
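Here's a quick back-of-the-envelope sketch of what those figures imply, using only the numbers quoted above. The one assumption is that Japan's consumption equals the 2026 data center figure exactly, since the report only calls the two "roughly equivalent", so treat the results as ballpark.

```python
# Figures quoted above; the rounding is mine, not the report's.
DATA_CENTRES_2022_TWH = 460      # estimated global data centre use in 2022
DATA_CENTRES_2026_TWH = 1000     # "more than 1 000 TWh", treated as a round number
JAPAN_SHARE_OF_WORLD = 0.037     # Japan at roughly 3.7% of world electricity

# Assumption: Japan's consumption is taken to equal the 2026 data centre figure,
# because the report describes the two as "roughly equivalent".
implied_world_twh = DATA_CENTRES_2026_TWH / JAPAN_SHARE_OF_WORLD

share_2022 = DATA_CENTRES_2022_TWH / implied_world_twh
growth_factor = DATA_CENTRES_2026_TWH / DATA_CENTRES_2022_TWH

print(f"Implied world electricity use: {implied_world_twh:,.0f} TWh")
print(f"Data centre share in 2022: {share_2022:.1%}")
print(f"Growth 2022 -> 2026: {growth_factor:.2f}x")
```

On these inputs, data centers come out at roughly 1.7% of world electricity in 2022, and the 2026 figure is a bit more than double the 2022 one.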

But I’m a loon who wanted to know more about that combination of crypto, AI, and everything else, so I downloaded the 170 page report and started reading.

On page 15:

Still, emerging market countries recorded strong growth in electricity demand. By contrast, most advanced economies posted declines amid the lacklustre macroeconomic environment as well as the weak industry and manufacturing sectors, despite continued electrification. Milder weather compared to the previous year also exerted downward pressure on electricity consumption in some regions, including the United States.

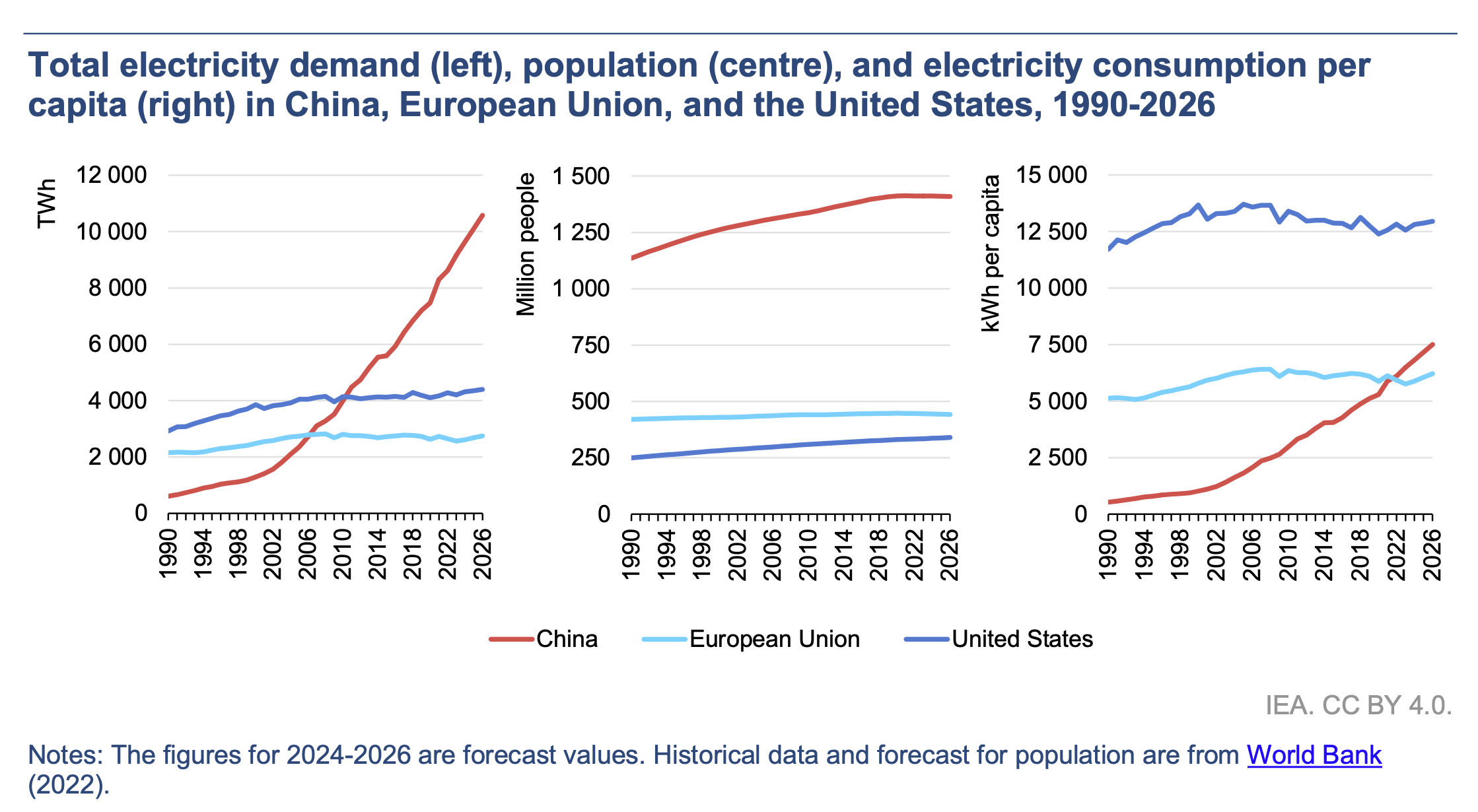

Overall energy use increased by 2.2% in 2023 compared to 2022, with the largest nations decreasing in usage while emerging markets brought the total up. China, which I guess counts as an emerging market, accounts for nearly one third of all energy use in the world, and they are where most of the energy increase is coming from. It is actually crazy to look at their energy use over the past 35 years compared to the US and EU. From their report:

But then we got to the bit that explained everything:

Search tools like Google could see a tenfold increase of their electricity demand in the case of fully implementing AI in it. When comparing the average electricity demand of a typical Google search (0.3 Wh of electricity) to OpenAI’s ChatGPT (2.9 Wh per request), and considering 9 billion searches daily, this would require almost 10 TWh of additional electricity in a year.

Okay, so there it is, this is where the 10x number largely comes from. If Google did a full LLM request on every single Google search, then that would 10x the amount of electricity used since as we all know, LLM requests use 10x as much power as a Google search.
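The arithmetic behind that quote is easy to reproduce. This is just a sketch using the three numbers in the quote (0.3 Wh, 2.9 Wh, 9 billion searches a day); the report doesn't spell out whether "almost 10 TWh" means the total or the difference, so both are computed here.

```python
SEARCHES_PER_DAY = 9e9   # "9 billion searches daily"
GOOGLE_WH = 0.3          # Wh per typical Google search, as quoted
CHATGPT_WH = 2.9         # Wh per ChatGPT request, as quoted
DAYS_PER_YEAR = 365
WH_PER_TWH = 1e12


def annual_twh(wh_per_request, requests_per_day=SEARCHES_PER_DAY):
    """Annual electricity in TWh for a given per-request cost."""
    return wh_per_request * requests_per_day * DAYS_PER_YEAR / WH_PER_TWH


baseline = annual_twh(GOOGLE_WH)    # every search at today's search cost
all_llm = annual_twh(CHATGPT_WH)    # every search at the quoted ChatGPT cost
additional = all_llm - baseline     # the difference between the two
ratio = CHATGPT_WH / GOOGLE_WH      # the "tenfold" multiplier

print(f"Search today:      {baseline:.2f} TWh/year")
print(f"All searches LLM:  {all_llm:.2f} TWh/year")
print(f"Additional:        {additional:.2f} TWh/year")
print(f"Per-request ratio: {ratio:.1f}x")
```

The total comes out around 9.5 TWh a year and the difference around 8.5 TWh, so "almost 10 TWh" lines up with either reading depending on how generously you round.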

Except, as I wrote one week ago today, that ChatGPT energy consumption estimate may have been inflated by about 10x. From that study:

We find that typical ChatGPT queries using GPT-4o likely consume roughly 0.3 watt-hours, which is ten times less than the older estimate. This difference comes from more efficient models and hardware compared to early 2023, and an overly pessimistic estimate of token counts in the original estimate.

Also I didn’t mention it in that article, but the “LLM requests use 10x as much energy as a Google search” claim assumed each query a user was entering into ChatGPT was 1,500 words long, which would make the processing far more significant than the typical string most people enter into ChatGPT or Google. For reference, we’re around word 733 in this blog post, so not even halfway to the average request length needed to hit that 10x number everyone cites.

Will Google do a full LLM search on all queries it gets? Unlikely. And if it does, will each of those searches be essays written into the search box, will they be run on GPUs less efficient than the ones we’ve had for a few years now, and will they be on models that negate all the progress made in the last few years? Probably not.
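To see how sensitive the headline number is to those assumptions, here's a small sketch. It assumes the LLM request is added on top of every affected search (rather than replacing it), and the "one in four searches" share is a made-up number purely for illustration, not something from either study.

```python
def extra_twh_per_year(llm_share, llm_wh, searches_per_day=9e9):
    """Extra annual electricity (TWh) if a share of searches also runs an LLM request.

    Assumption: the LLM request is added on top of the normal search cost.
    """
    return searches_per_day * llm_share * llm_wh * 365 / 1e12


scenarios = {
    # Older per-request estimate, applied to every search
    "every search, 2.9 Wh": extra_twh_per_year(1.0, 2.9),
    # Newer GPT-4o estimate, applied to every search
    "every search, 0.3 Wh": extra_twh_per_year(1.0, 0.3),
    # Hypothetical share, chosen only to illustrate partial rollout
    "one in four searches, 0.3 Wh": extra_twh_per_year(0.25, 0.3),
}

for name, twh in scenarios.items():
    print(f"{name}: {twh:.2f} TWh/year")
```

Swapping the 2.9 Wh estimate for the 0.3 Wh one drops the result from about 9.5 TWh to about 1 TWh a year, and a partial rollout shrinks it further.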

But now let’s get to the crypto bit, since my understanding is that they use far more energy than LLMs:

In 2022, cryptocurrencies consumed about 110 TWh of electricity, accounting for 0.4% of the global annual electricity demand, as much as the Netherland’s total electricity consumption. In our base case, we anticipate that the electricity consumption of cryptocurrencies will increase by more than 40%, to around 160 TWh by 2026. […] Bitcoin is estimated to have consumed 120 TWh by 2023, contributing to a total cryptocurrency electricity demand of 130 TWh.

So that’s crypto, with about 130 TWh per year, almost all of which comes from Bitcoin. Now how about AI stuff?

AI electricity demand can be forecast more comprehensively based on the amount of AI servers that are estimated to be sold in the future and their rated power. The AI server market is currently dominated by tech firm NVIDIA, with an estimated 95% market share. In 2023, NVIDIA shipped 100,000 units that consume an average of 7.3 TWh of electricity annually. By 2026, the AI industry is expected to have grown exponentially to consume at least ten times its demand in 2023.
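For scale, here's a rough comparison of that tenfold AI figure against the crypto numbers quoted earlier. It's a sketch that treats "at least ten times" as exactly ten times, so the AI number is a floor rather than a forecast.

```python
AI_2023_TWH = 7.3        # annual consumption of NVIDIA's 2023 shipments, as quoted
AI_GROWTH_FACTOR = 10    # "at least ten times", treated here as exactly 10x
CRYPTO_2023_TWH = 130    # total cryptocurrency demand by 2023, as quoted
CRYPTO_2026_TWH = 160    # base-case crypto forecast for 2026, as quoted

ai_2026_twh = AI_2023_TWH * AI_GROWTH_FACTOR

ratio_vs_crypto_2026 = ai_2026_twh / CRYPTO_2026_TWH
ratio_vs_crypto_2023 = ai_2026_twh / CRYPTO_2023_TWH

print(f"AI in 2026 (10x): {ai_2026_twh:.0f} TWh")
print(f"vs crypto 2026:   {ratio_vs_crypto_2026:.0%}")
print(f"vs crypto 2023:   {ratio_vs_crypto_2023:.0%}")
```

On these inputs the tenfold AI figure is about 73 TWh, which is a bit under half of the 2026 crypto forecast and a bit over half of the 2023 crypto figure.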

Again, this is running on some math and assumptions that I’m not convinced are accurate, but let’s take their case at face value – if AI compute usage goes up tenfold, it will still use less than half as much energy as crypto. We are in very big numbers territory now, and I definitely get that. If anything, this shows how much of a blight crypto is considering its fundamentally power-hungry nature and how few people use it regularly compared to AI tools that are about as mainstream as any software product in the world today. This chart shows the 10x growth of AI datacenter consumption, and something else actually stands out to me:

Traditional data center energy use is also massively increasing. In fact, take AI and crypto out of this, and you’ve already got a major story about increasing data center power use. The study reports that the reasons more data centers are going up in places like California, Texas, China, Denmark, and Ireland basically all come down to low costs and tax incentives, but it doesn’t get into what these increases are powering. I guess it’s a bit of everything as we move more and more of the things we do online, but I’d definitely be interested to see more nitty gritty details on what the biggest factors are in this general substantial growth in data center power use.

Where does that get us?

So where does that leave us as we get to word 1,263 in this post (besides not even to the average LLM prompt in that study that everyone uses for measuring AI power draw 🙃)? Let’s talk perspective again: if this study’s 10x AI usage prediction is correct and combined data center usage hits about 820 TWh as they expect, that amounts to about 2.7% of all energy use in the world powering data centers. Is that a lot or a little? It depends on your perspective, of course. When I think about how much of our lives is managed or full-on lives in the cloud, then 2.7% doesn’t seem insane. However, when I think about that as more energy than Brazil uses every year, it sounds like a ton.

Then I get to page 62 in the report where they look at emissions, and we actually see reductions in emissions from the 3 biggest energy consumers in the world (China, the US, and the EU) with a forecasted worldwide drop in emissions of 3.5% from 2023 to 2026, and I can’t help but feel a little optimistic again.

I guess my hope is that we continue to move to more zero-to-low emission energy sources like we have been for a few decades and that we continue to optimize LLMs to be useful but also less costly than they are today.

And there we go, exactly 1,500 words, like the average ChatGPT prompt 😉