Fake frames?

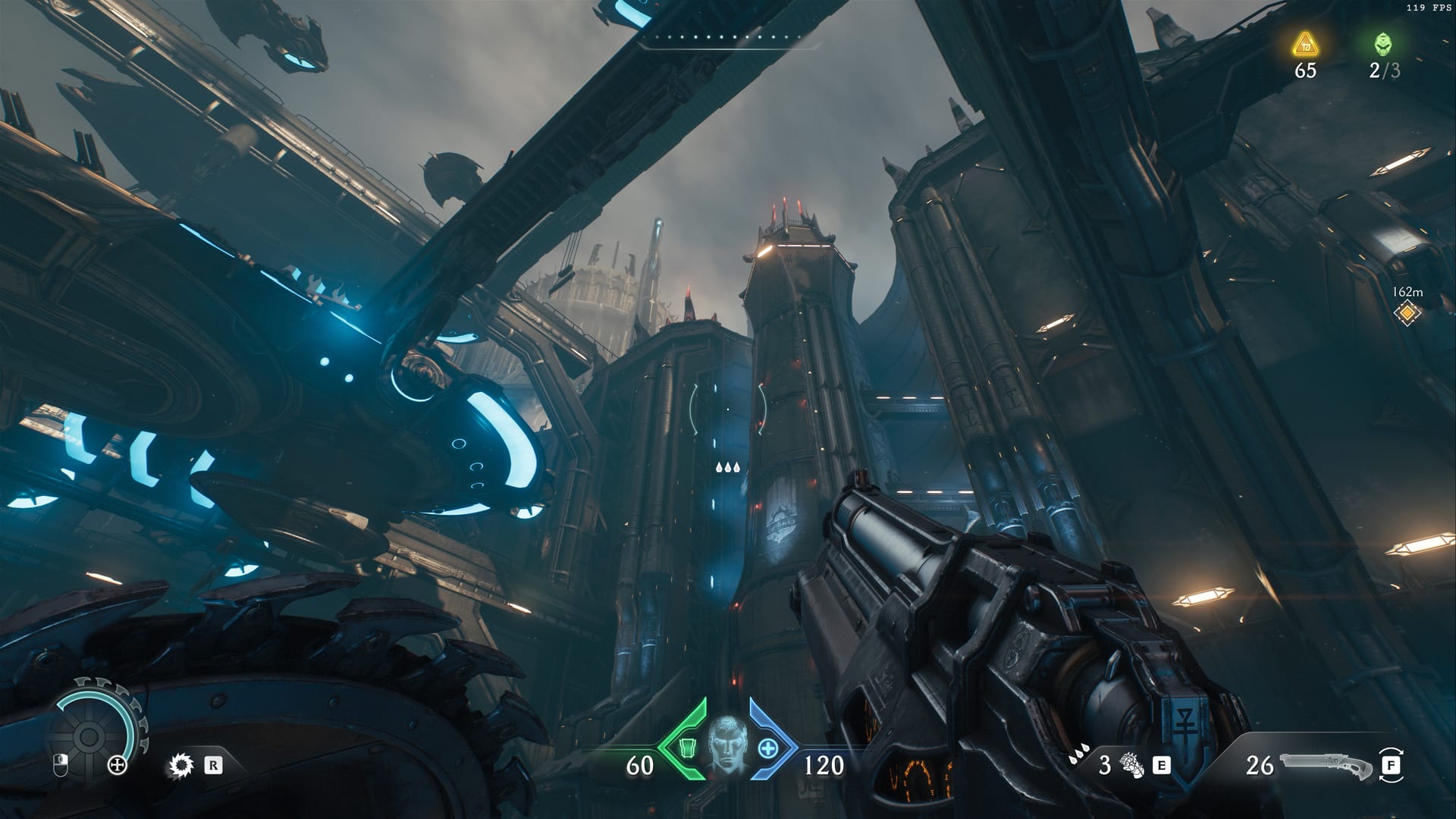

At the top of this post there is a screenshot I took from Doom: The Dark Ages, which I played on PC. Looks pretty great, right!?

Wrong!

As some in the video game community would say, this is a “fake frame” from the game. Why? Well, the screenshot is 4K, but I was playing with DLSS enabled, so this is actually more like a 1440p (or maybe even 1080p) frame that was upscaled in real time to 4K. Also, I was using frame generation, which doubled my frame rate, so there’s a 50% chance this frame technically wasn’t even rasterized at all by the GPU. Here are a few more screenshots, all of which could be “fake frames”, so the odds are quite high that at least one of these is a dirty fake frame.
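To put rough numbers on that, here is a back-of-the-envelope sketch in Python. It assumes the internal render resolution is exactly 2560x1440 or 1920x1080 (the real figure depends on the DLSS mode, which isn't stated here) and that frame generation inserts one generated frame per rendered frame. The function name `rasterized_share` is made up for this illustration.

```python
from fractions import Fraction


def rasterized_share(internal, output, generated_per_rendered=1):
    """Fraction of displayed pixels that were traditionally rasterized.

    internal / output are (width, height) tuples. Both resolutions and the
    one-generated-frame-per-rendered-frame default are assumptions made
    for illustration, not measured values from the game.
    """
    iw, ih = internal
    ow, oh = output
    # Share of each output frame's pixels that exist at the internal resolution.
    pixel_share = Fraction(iw * ih, ow * oh)
    # Share of displayed frames that went through the normal render path.
    frame_share = Fraction(1, 1 + generated_per_rendered)
    return pixel_share * frame_share


four_k = (3840, 2160)
for name, res in [("1440p", (2560, 1440)), ("1080p", (1920, 1080))]:
    share = rasterized_share(res, four_k)
    print(f"{name} -> 4K with 2x frame generation: {float(share):.1%} rasterized")
```

Under those assumptions, somewhere between an eighth and a quarter of the pixels on screen came from the traditional render path, and the result still looks like the screenshots in this post.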

I'm of course being a bit sarcastic. I think these screenshots look amazing, and the game looks even better in motion at 100-150 frames per second. If I were really intent on finding issues in the game's visuals, I probably could. There are probably odd moments here and there where things don't look absolutely perfect, and there are certainly examples of games that don't use the tech we've talked about to such great effect, but you could say both of those things about all video game tech, right? Screen space reflections are often nice, but you can easily see the major flaws if you look at them at all critically. Temporal anti-aliasing (TAA) is similar, and the list goes on.

I haven't even mentioned the fact that all of these images were converted from lossless PNGs to highly compressed JPEGs, so they're even lower quality than what I saw in real time.

I guess what I'm saying is that there's a dogmatic view out there of how graphics rendering works, and that view seemingly has no space for any advancements in rendering tech beyond making traditional 2000s-era rendering faster (the overlap between those who don't like DLSS or frame generation and those who don't like ray tracing seems quite significant). Obviously I think raw computational power should improve, in no small part because not every single game uses these more cutting-edge technologies, but I don't think traditional advancements are all that matter. GPUs themselves were a major innovation that took work that used to be done one way (on the CPU) and radically changed how graphics were generated. Other techniques such as LOD management and foveated rendering are longer-running tricks to make an image look more crisp and clean than it would otherwise appear.

It sounds like a dumb thing to say, but I feel it must be said: every frame rendered to your screen is "fake". All of it is a computer doing a ton of math to put an array of colorful pixels on your display. The fact that some of those pixels are calculated with one piece of tech and others are rendered another way feels mostly academic, or relevant only to comparison bar charts that need to seem competitive or "apples-to-apples" even if those bars don't reflect new tech or how people actually use their GPUs. Even if you disagree with this opinion, I would simply counter with those (compressed) screenshots above, showing modern rendering technologies that allow me to play a gorgeous, modern game like Doom: The Dark Ages (with fully ray traced lighting and reflections as well) at a frame rate and resolution that are truly outstanding. If these are "fake frames", then I don't care one bit.