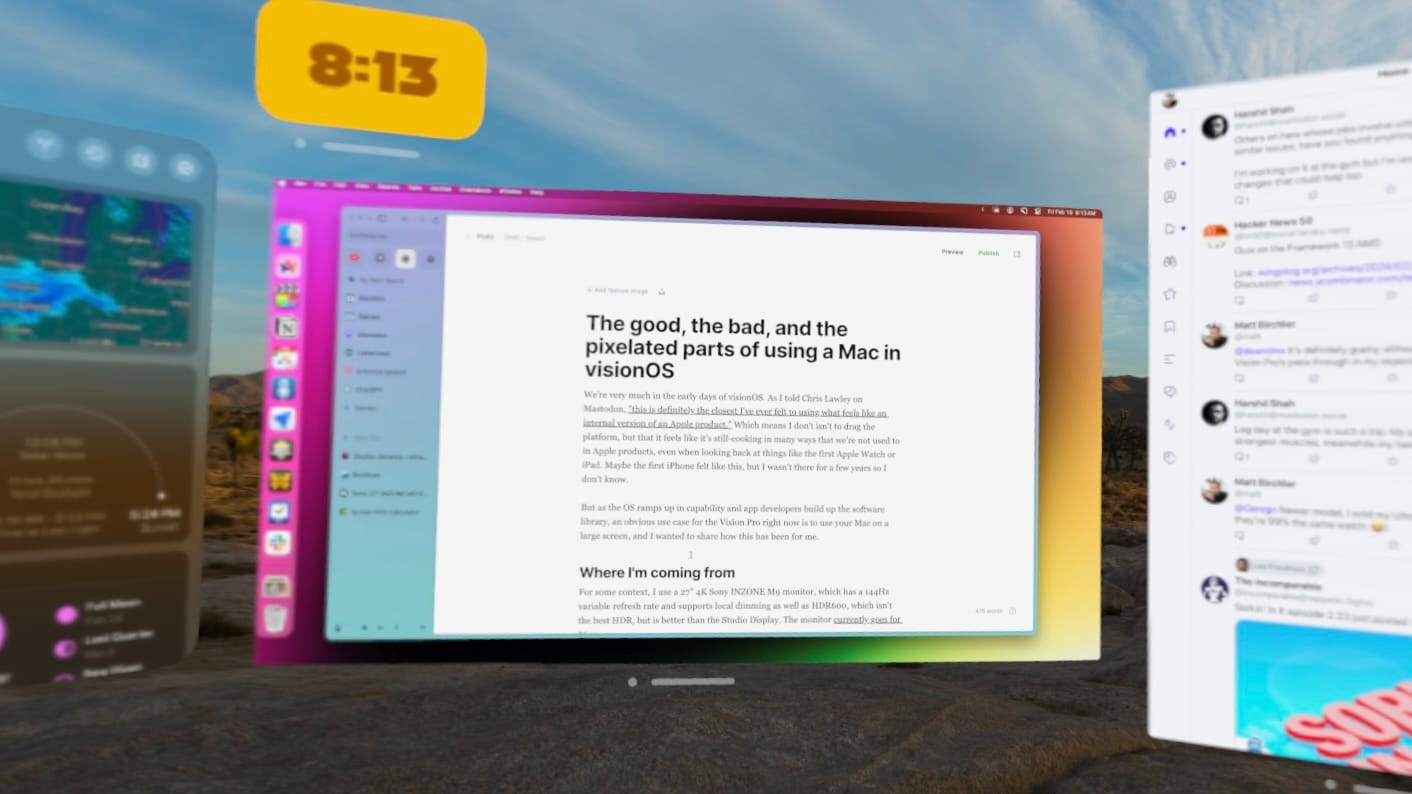

The good, the bad, and the pixelated parts of using a Mac in visionOS

We're very much in the early days of visionOS. As I told Chris Lawley on Mastodon, "this is definitely the closest I've ever felt to using what feels like an internal version of an Apple product." This isn't to drag the platform; it just feels like it's still cooking in ways we're not used to from Apple products, even when looking back at things like the first Apple Watch or iPad. Maybe the first iPhone felt like this, but I wasn't there for the first few years so I don't know.

But as the OS ramps up in capability and app developers build up the software library, an obvious use case for the Vision Pro right now is to use your Mac on a large virtual screen, and I wanted to share how this has been for me.

Where I'm coming from

For some context, I use a 27" 4K Sony INZONE M9 monitor, which has a 144Hz variable refresh rate and supports local dimming as well as HDR600, which isn't the best HDR, but is better than the Studio Display, for what it's worth. The monitor currently goes for $699.

I only use the single monitor and genuinely dislike using a computer with multiple monitors. I also have no desire to get anything bigger than 27" right now, as the added space wouldn't be useful.

A quick tangent on a personal pet peeve

Rewind a few years to when the Studio Display released and there was a very frustrating conversation at the time about what "real Mac users" demand from a display. There were countless podcasts and blog posts about how a 4K 27" monitor may be okay for Windows bums, but wasn't what the discerning Mac user would ever accept in terms of clarity; it was 5K or bust.

It bugged me on several levels, not least of which was that the suggestion kept coming up that you couldn't do perfect pixel doubling of the UI at 4K and that there would always be some blurriness because of that. This drove me crazy because of course you can do pixel-perfect UI scaling; you just have to do it as an effective 1080p resolution. I get that some people don't want to do this if they're used to a 5K monitor, but it's not a massive difference and it leads to a wonderfully crisp image.

Also I sit about 24 inches from my monitor, and according to numerous "retina calculator" websites I've seen, that means my perfectly pixel-doubled 4K 27" screen is considered "retina" at 21 inches away.
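For the curious, here's a rough sketch of the math I assume those calculators are doing. The big assumption is the threshold: I'm treating "retina" as one pixel covering 1 arcminute of your vision, which is a common rule of thumb and not something any particular site confirmed to me. The function names are mine.

```python
import math

def pixels_per_inch(diagonal_in, h_px, v_px):
    """Physical pixel density of a panel from its diagonal and resolution."""
    return math.hypot(h_px, v_px) / diagonal_in

def retina_distance_in(ppi, arcmin_per_pixel=1.0):
    """Distance (inches) at which one pixel subtends the given angle.

    Assumption: 1 arcminute per pixel is the "retina" cutoff.
    """
    pixel_pitch_in = 1.0 / ppi
    angle_rad = math.radians(arcmin_per_pixel / 60.0)
    return pixel_pitch_in / math.tan(angle_rad)

# 27" 4K monitor (3840 x 2160)
ppi = pixels_per_inch(27, 3840, 2160)
print(f"{ppi:.0f} PPI, retina beyond about {retina_distance_in(ppi):.0f} inches")
```

Under that assumption, a 27" 4K panel works out to roughly 163 PPI and a cutoff of about 21 inches, which lines up with what the calculators told me.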

But let's get back to using a Mac in a Vision Pro.

Clarity

I of course brought up this old bugaboo because while the Mac Virtual Display in the Vision Pro is the best I've seen personally, it's certainly not as crisp as using even my already substandard to some (😉) 4K monitor.

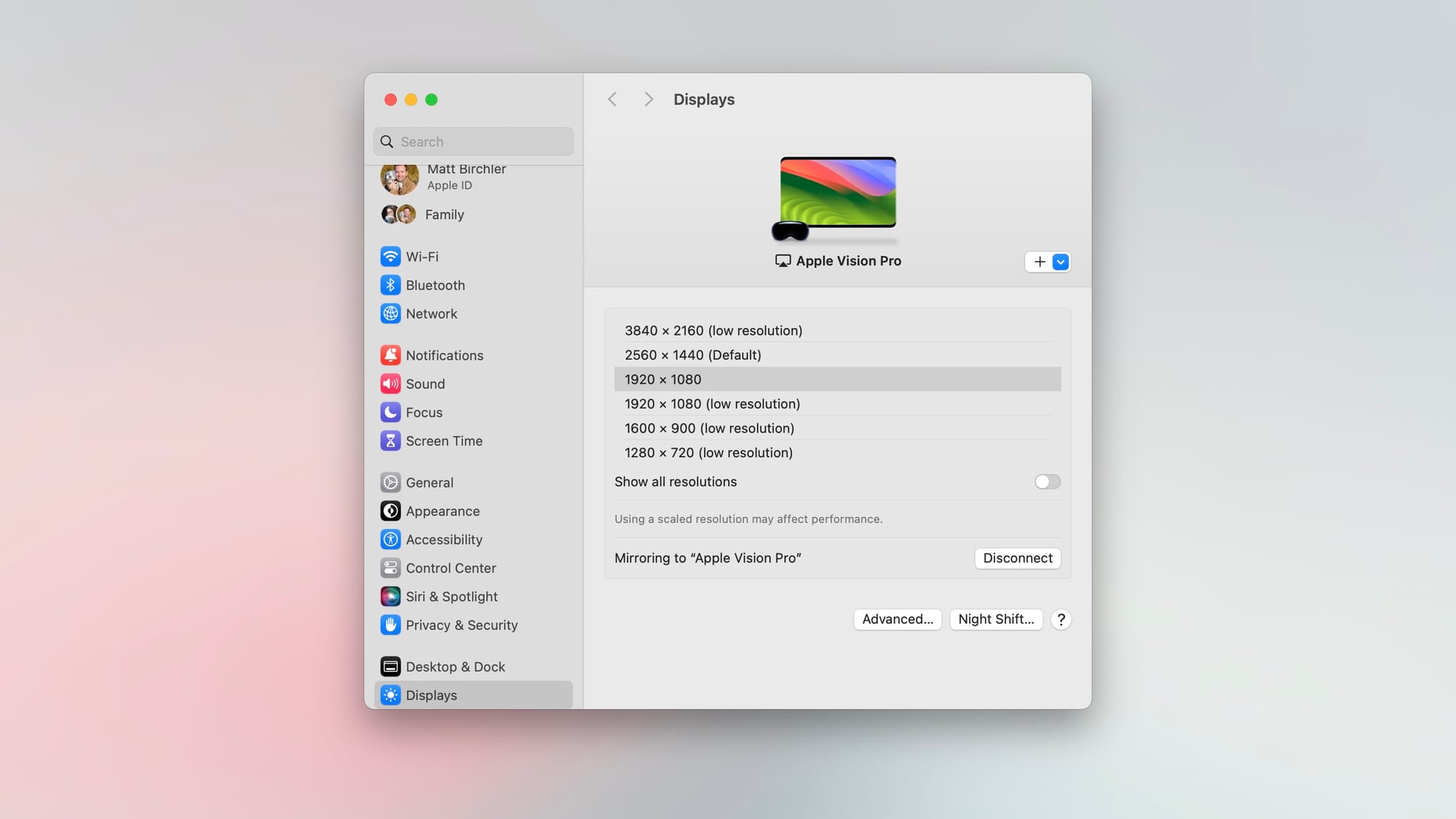

The "effective" resolution of the virtual screen defaults to 1440p just like it would on a 5K monitor, and it's doing some pixel doubling to output a theoretical 5K image. I actually found this made the UI too small to comfortably use, so just like on my Sony monitor, I dropped it down to 1080p with pixel doubling to 4K.

But the "resolution" of the display is not the best way to describe clarity in a headset. After all, the real world I'm in has effectively infinite "resolution" but doesn't look perfect in the headset, so what do we care about?

We care about pixels per degree of vision (PPD). In short, this is how many pixels are in every degree of horizontal distance you perceive in the headset. In VR we don't care about PPI or DPI; we care about PPD. Using this measurement, the Vision Pro's display is about 34 PPD, which is higher than the Quest 3 (25 PPD) or the PlayStation VR2 (19 PPD), so major improvements there, but how does that compare to the effective PPD of a 4K monitor 24 inches from my face?

According to this site, my monitor has 74 PPD, or a little over twice that of the Vision Pro. For those of you with a 5K monitor, you're around 98 PPD at the same viewing distance. In fact, the 34 PPD of the Vision Pro is closest to that of a 1080p 27" monitor when viewed from 24 inches.
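If you want to check numbers like these for your own setup, here's a minimal sketch. I'm assuming the calculator takes the horizontal pixel count and divides it by the horizontal angle the screen covers from where you sit, on a flat 16:9 panel viewed dead center. That's my guess at the method, not something the site spells out, and the function names are made up.

```python
import math

def screen_width_in(diagonal_in, aspect_w=16, aspect_h=9):
    """Physical width of a flat panel from its diagonal and aspect ratio."""
    return diagonal_in * aspect_w / math.hypot(aspect_w, aspect_h)

def average_ppd(h_px, diagonal_in, distance_in):
    """Horizontal pixels divided by the horizontal angle the screen covers."""
    half_width = screen_width_in(diagonal_in) / 2
    fov_deg = 2 * math.degrees(math.atan(half_width / distance_in))
    return h_px / fov_deg

# 27" monitors viewed from 24 inches
for label, h_px in [("1080p", 1920), ("4K", 3840), ("5K", 5120)]:
    print(f"{label}: {average_ppd(h_px, 27, 24):.0f} PPD")
```

With those assumptions I get roughly 37, 74, and 98 PPD for 1080p, 4K, and 5K, so the figures above hold up.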

Now of course, you can make the virtual Mac display much larger than your real life monitor, and that will allow you to see more of the 4K/5K image coming from your Mac, but then you run into issues with needing to move your head more to see everything on the screen. For me, that's annoying, but if you want a screen that occupies your entire field of view, you can do that. You're still only going to get 34 PPD, but more of that original image will be visible.
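To put a rough number on "much larger": if the headset really is about 34 PPD, you can estimate how many degrees wide the virtual screen would need to be before every pixel coming from the Mac gets its own headset pixel. This is a back-of-the-napkin sketch that assumes PPD is constant across your whole view, which it almost certainly isn't in a real headset.

```python
def degrees_needed(h_px, headset_ppd=34):
    """Horizontal angle a virtual screen must span to show h_px pixels 1:1.

    Assumption: the headset delivers a uniform pixels-per-degree everywhere.
    """
    return h_px / headset_ppd

for label, h_px in [("1080p", 1920), ("4K", 3840), ("5K", 5120)]:
    print(f"{label}: about {degrees_needed(h_px):.0f} degrees wide")
```

By this estimate a full 4K image would need a screen well over 100 degrees wide, which is a lot of head turning.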

Environments

One of the things I didn't really expect to value as much as I do is the digital environments, which are kind of excellent. They look absolutely fantastic, and the ability to have them take up whatever portion of my world I want is shockingly useful. I personally prefer the Joshua Tree environment, which is very serene and comes with some nice background noise. I wouldn't say I'm ever tricked into thinking I'm actually there, but it does make me focus a little better at times when I need it.

I tend to have the environment set to take up about 60% of my view, which covers everything in front of me, but leaves my keyboard and mouse visible. I'm kind of a touch typer, but sometimes I do need to look down and see my keyboard or coffee, so this mix works for me.

Companion apps

Another thing I find compelling about this feature is how it lets me float visionOS apps around my Mac's display. I'm still toying around with what makes sense, but I've found I like a few things:

- Things 3 which I can just glance at and pinch a task to mark it as complete

- Widgetsmith's time widget which simply lets me see the current time clearly

- Day Peek which shows a countdown to my next calendar event

- Music in its mini player window

- Carrot Weather on days where it's looking dicey outside

- Ivory when I just want to see a feed of the day's goings on at a glance

- Home UI which lets me make buttons that toggle HomeKit devices without needing to use Siri

I think this will evolve over time, and while many of these things can exist on the Mac as well, I do find myself enjoying having them floating in space quite a bit.

Limits

Beyond general clarity discussed above, there are a few limits to this mode that make it imperfect and I hope can be improved in time.

First is that audio doesn’t get routed to the virtual display, which in turn means you can’t hear it through the Vision Pro’s speakers. You can pair headphones with your Mac or you can play audio through your Mac’s speakers, but it’s not the same and I really hope they can add this in an update.

Next is an issue with inputs. Apple introduced a seamless way to move your mouse and keyboard focus from your Mac to an iPad a few years ago, but I never really understood the use case back then. I know some other people liked it, but it didn’t click for me. However, when your Mac is paired to your Vision Pro for Virtual Display, suddenly that handoff works magically as you shift your gaze from your virtual Mac screen to other apps open in visionOS. This means I can type on my Mac, look over at Ivory floating off to the side, and type a post from my mechanical keyboard seamlessly. It’s awesome when it works, but there are several things that make this a bit odd.

- While my keyboard can work in any visionOS app, my mouse cannot. The Magic Trackpad can work and you will see an iPadOS-style cursor when you use that, but regular mice simply do not work at all.

- Sometimes I’m looking at something in one window, but want to type in another (like when I’m referencing someone else’s article), but visionOS’s eye-tracking means whatever I’m looking at gets focus, so I’ll end up typing in the wrong app.

- If you use a Magic Keyboard, visionOS will put the typing preview box right above the keyboard automatically. It seems to be doing this with ML vision that detects the keyboard in 3D space. Sadly, no other keyboards get this treatment, so the preview box goes somewhere seemingly random and I need to manually move it where I want. I’m hoping this can get improved in a visionOS update.

Finally, this is a me thing, but I can only create a virtual display of my personal Mac, not my work Mac. My work Mac is logged into iCloud with my work account, and there is simply no way to bring that into my Vision Pro. I understand that I shouldn’t be able to bring any random stranger’s Mac around me into my view, but I can easily use Virtual Display from my personal Mac to my work Mac, and I would like to be able to do this on the Vision Pro as well.

Some concerns about living in a headset

I also value living in a mix of the real and digital world. I know the idea of visionOS is that it lets you feel like you're in the real world, but I think the tech simply isn't there yet to make this fully convincing. I'm still staring at a screen, even if that screen is showing the world around me, and I'm still wearing a heavy headset that's fucking up my hair the whole time it's on my head. When I'm using a Mac, I'm not staring directly at the screen 100% of the time, I'm looking around my room or out the window, but in a headset, it's all screen all the time.

I wouldn't say I've had any real pain or side effects so far, but there are some things on my mind here.

The lens system in a VR headset like the Vision Pro lets screens that are physically millimeters from your eyes be optically several feet away. This is why you can see the Vision Pro displays clearly, even though if you held your iPhone that close to your face you would never be able to see that screen in focus. I believe the Vision Pro makes the displays effectively 4 feet or so in front of you, so you can focus comfortably. This is also why it's technically not hypocritical for Apple to add an (optional) warning to iOS for when you hold your phone too close to your face at the same time they announced a headset with screens even closer to your face.

But that's not to say it's all good. There's the vergence-accommodation conflict, which is a common issue with VR headsets. Despite simulating depth, you are still seeing everything the exact same distance from you, so your focus can go from something inches from your face to something a mile away and your eyes don't need to do anything to see both of them clearly. This kind of breaks our brains a bit since we've lived for hundreds of thousands or millions of years (depending when you start counting, at "homo" or at "sapiens") with our eyes needing to move certain ways to see our world. VR breaks that and it can cause fatigue, dryness, or more serious issues in people.

We have some issues with too much screen time on traditional screens as well, and the solution there is to take breaks and do stuff like looking away into the distance on a regular basis to help with fatigue. A headset makes this harder since you can't look away from the screen unless you take the thing off (which also puts it to sleep). Again, I haven't experienced bad issues here, but I also haven't used my headset for a full day of working from my Mac, and anecdotally, discomfort seems to be one of the reasons people are returning their Vision Pros, so your mileage may vary.

When would I use this?

Ultimately, this isn’t a feature I’m going to use all the time. If I’m at my desk, and especially if I’m doing intense video or photo editing, I’m going to want the added clarity of my real monitor. I have edited a YouTube video from my Vision Pro and I’ve done some photo editing as well, but honestly the real display is a bit better at both. The added clarity and improved color depth add up to a nicer experience for what I do with a Mac.

But the ability to focus a bit better in a digital Joshua Tree and to have certain bits of data floating around me are also quite compelling, so there are times where I do actually use the headset at my desk even though I’m sacrificing clarity and color depth. I’m just over a week into using this thing at all, so it’s hard to see where my balance lands, but there’s definitely something here that’s pretty compelling.

The huge use case for me is going to be when I’m not at my desk, though. Like I said in my post about movies suddenly being something I can experience at the highest quality anywhere I want, now I can bring my big screen Mac experience more places as well. I don’t have to work all day at my desk; I could move to the living room or even go outside and work from the patio, and my big screen would come with me. If I’m on the road either on vacation or a business trip, I’ll be able to bring this whole experience with me as well. I’ve been used to having a limited Mac experience on the road, and this opens a lot of potential for a much better work experience wherever I am. I’m not saying this is worth the cost of admission on its own, but it’s pretty rad if you already have the damn thing.
