Critics vs "Real People": Rotten Tomatoes (and Letterboxd) Data Tells All

A thing I've seen crop up over the past few years has been people lamenting how film critics "just don't get it" when it comes to determining what actually is good. While I would contend that "good" and "popular" often get conflated, I wanted to look at the numbers to see a few things:

- Is there a dichotomy between how critics and regular people recommend movies?

- Has the dichotomy gotten worse recently?

- Unrelated, are movies getting longer?

Method

I went through the top box office hits from 2010 through 2021 and recorded each film's critic score and audience score on Rotten Tomatoes, as well as its Letterboxd rating. I looked at the top 20 movies of each year from 2010 to 2019, but only the top 10 in 2020 and 2021 since way fewer movies were released in theaters.

I also recorded the runtime of each film to see if the most popular movies are also getting longer.

All in, this was 220 movies tracked.

Oh, and I made a video showing how Keyboard Maestro made this way easier.
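For anyone who wants to reproduce the per-year comparison, here's a minimal sketch of the bookkeeping in Python. It assumes the data sits in simple `(year, title, critic score, audience score)` rows. The function names and the sample rows are placeholders made up for illustration, not the real spreadsheet.

```python
from collections import defaultdict
from statistics import mean

# Placeholder rows for illustration only, NOT the real data:
# (year, title, critic score, audience score)
SAMPLE_ROWS = [
    (2010, "Film A", 80, 90),
    (2010, "Film B", 60, 70),
    (2011, "Film C", 50, 40),
]


def expected_film_count(first_year=2010, last_year=2021):
    """Top 20 films per year through 2019, top 10 per year after that."""
    return sum(20 if year <= 2019 else 10
               for year in range(first_year, last_year + 1))


def yearly_gaps(rows):
    """Return {year: (critic_avg, audience_avg, audience_avg - critic_avg)}."""
    by_year = defaultdict(list)
    for year, _title, critic, audience in rows:
        by_year[year].append((critic, audience))

    result = {}
    for year, scores in sorted(by_year.items()):
        critic_avg = mean(c for c, _ in scores)
        audience_avg = mean(a for _, a in scores)
        # Positive gap means audiences scored the year's films higher.
        result[year] = (critic_avg, audience_avg, audience_avg - critic_avg)
    return result
```

`expected_film_count()` is just a sanity check on the sample size described above, and `yearly_gaps(SAMPLE_ROWS)` gives one averaged row per year, which is the shape of data a year-by-year chart needs.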

Findings on the Overall Data

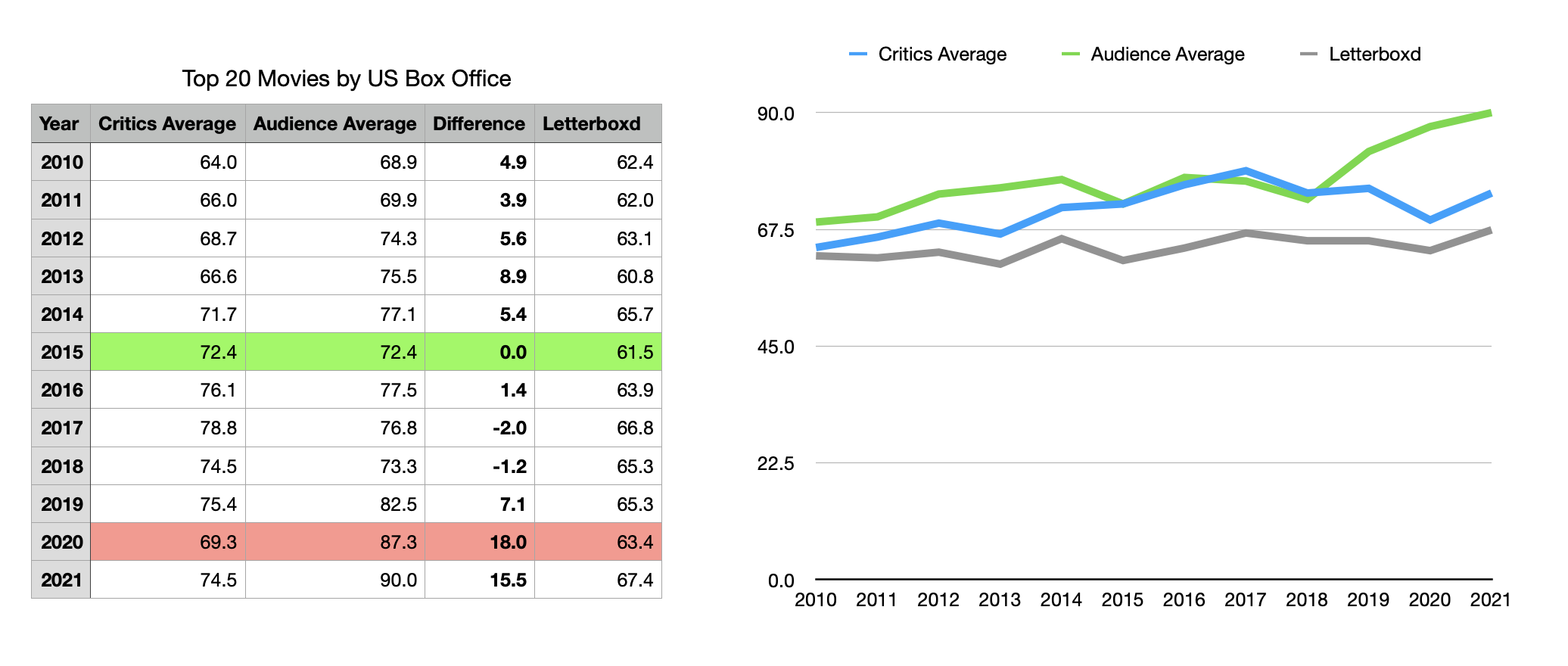

This chart and graph answer my original question best, and there are a few takeaways.

- In aggregate, critics and ordinary people are usually about 5 points apart.

- Usually, ordinary people rate the highest-grossing movies higher. This makes sense as they are voting for these movies with their wallets, not just their reviews. Critics, meanwhile, see everything, so in theory, these films are about the same as everything else for them.

- 2020 and 2021 have seen a major divergence in scores. Critics were consistent, while audience reviews skyrocketed to the first 90% year ever.

- The audience scores for movies in 2021 are absolutely ridiculous. Either this was the best year for movies ever, or there's some phenomenon going on here I can't see in just the data.

- 2015 was a year of peace, as the critics and the audience agreed perfectly on the quality of the top 20 movies.

The third point is the biggest oddity to me, as audience scores skyrocketed! While the critics' average compared to 2017 was exactly the same, audience reviews are a full 17% more positive than they were just a few years ago. Why is this? I don't actually know, but I'd love to hear some theories.

One theory I have is that recency bias means people like these films more now that they're novel, and the scores will lower over time as people see these movies in 2022 and beyond and leave reviews later.

The Outliers

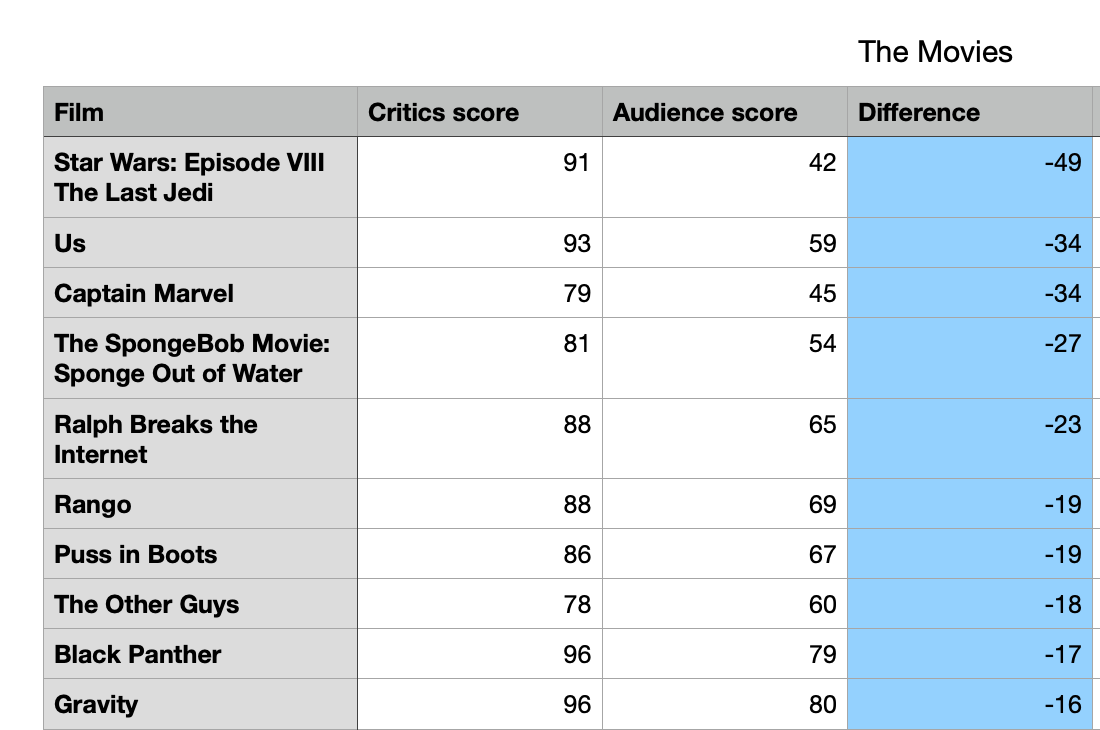

Here are the specific movies that had the biggest rifts between critics and audiences.

The Last Jedi might be an anomaly because review-bombing was a thing for that film in a way I have not seen before.

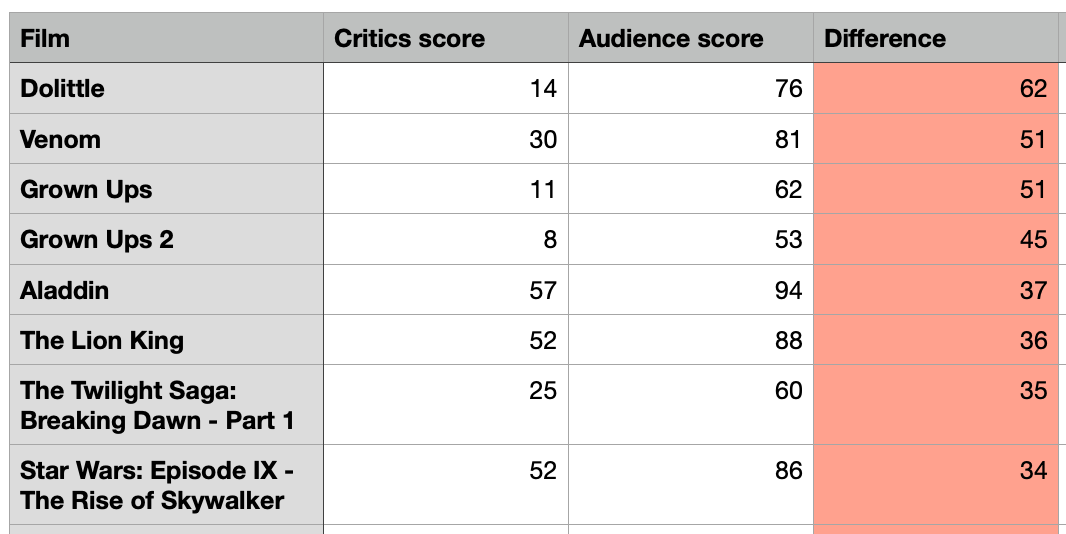

And here are the films critics liked way less than audiences:

Individual Film Notables

- Critics are much more likely to give a really low score to a movie. Critics gave 26% of these films a score below 60%, whereas only 14% of films scored that low with audiences. Basically, more than half of the audience generally likes almost every movie released.

- Interestingly, critics were also more likely to give very positive reviews to a movie. 24% of films got 90% or higher from critics, but only 14% of them got it from audiences.

- Spider-Man: No Way Home just came out and has the highest Letterboxd rating. If we assume that is inflated due to recency bias, then Get Out is the highest rated, neck-and-neck with Coco, Interstellar, Inception, and Little Women.

- The Last Airbender has the lowest Letterboxd rating by a mile, at just 1.1 (out of 5).

- 36% of movies have a 5% or less difference between critics and audiences.

- I'd say a difference of 15% or less is pretty similar vibes, and 72% of movies fell into that range.

- 30% of all top 10 movies have been comic book superhero movies. This doesn't count Star Wars or Fast and the Furious or sci-fi/fantasy stuff. In 2021, 5 of the top 6 movies were comic book superhero films.

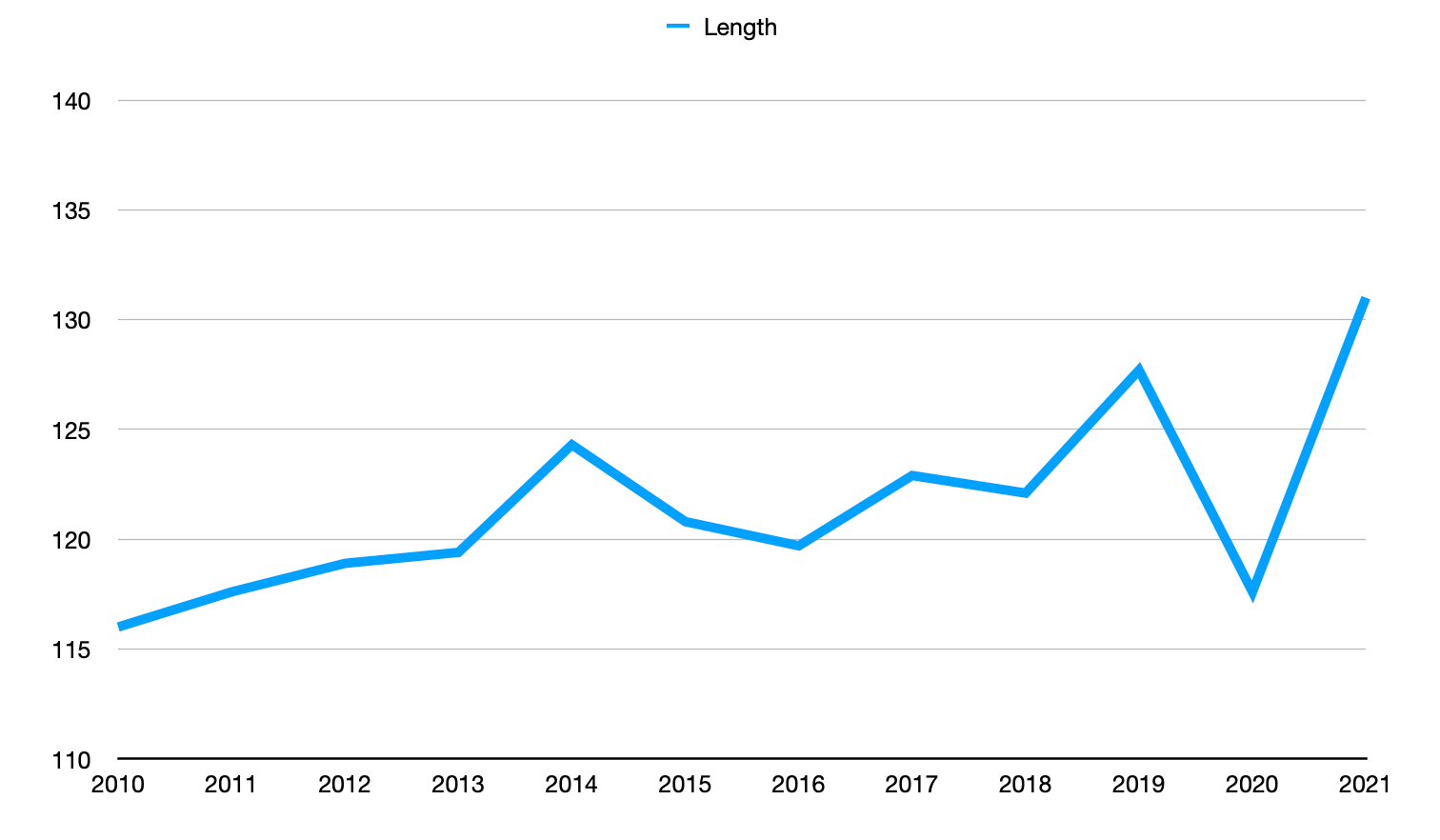
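The percentages in the list above are simple shares of the film list. Here's a rough sketch of how they could be computed, assuming each film is a `(critic score, audience score)` pair. The helper names are hypothetical and the sample pairs are placeholders, not the real data.

```python
def share_below(scores, cutoff):
    """Fraction of scores strictly below the cutoff (e.g. below 60)."""
    return sum(score < cutoff for score in scores) / len(scores)


def share_at_or_above(scores, cutoff):
    """Fraction of scores at or above the cutoff (e.g. 90 or higher)."""
    return sum(score >= cutoff for score in scores) / len(scores)


def share_within(pairs, max_gap):
    """Fraction of (critic, audience) pairs at most max_gap points apart."""
    return sum(abs(critic - audience) <= max_gap
               for critic, audience in pairs) / len(pairs)


# Placeholder pairs for illustration only: (critic score, audience score)
sample_pairs = [(90, 88), (50, 80), (70, 60), (95, 94)]
critic_scores = [critic for critic, _ in sample_pairs]

low_share = share_below(critic_scores, 60)          # critics below 60
high_share = share_at_or_above(critic_scores, 90)   # critics at 90 or above
close_share = share_within(sample_pairs, 5)         # within 5 points
similar_share = share_within(sample_pairs, 15)      # within 15 points
```

Running the same helpers over the audience scores instead of the critic scores gives the other half of each comparison.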

Are Movies Getting Longer?

According to my slice of data (popular movies over the last 12 years), the answer is yes. There's a bit of variance, but the general trend towards longer movies in the top 20 is impossible to miss.

Avengers: Endgame was the longest (and only 3-hour) movie to make the top 20 at 3 hours and 1 minute, while The Grinch was the shortest, at only 85 minutes. A Quiet Place and Gravity share the title for shortest live-action films, each at 91 minutes.
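One way to put a number on "getting longer" is to fit a straight line through the average runtime per year and look at its slope. This is a minimal sketch of that idea using ordinary least squares, not necessarily how the trend was judged here. The function names are hypothetical and the yearly averages in the example are placeholders.

```python
def trend_minutes_per_year(avg_runtime_by_year):
    """Least-squares slope of average runtime (minutes) against year."""
    years = list(avg_runtime_by_year)
    n = len(years)
    mean_year = sum(years) / n
    mean_runtime = sum(avg_runtime_by_year.values()) / n
    numerator = sum((year - mean_year) * (avg_runtime_by_year[year] - mean_runtime)
                    for year in years)
    denominator = sum((year - mean_year) ** 2 for year in years)
    return numerator / denominator


def format_runtime(minutes):
    """Turn a runtime in minutes into an 'Xh Ym' string."""
    hours, remainder = divmod(minutes, 60)
    return f"{hours}h {remainder}m"


# Placeholder yearly averages for illustration only, NOT the real data.
placeholder_averages = {2010: 100.0, 2011: 102.0, 2012: 104.0}
slope = trend_minutes_per_year(placeholder_averages)

# 3 hours and 1 minute is 181 minutes.
endgame_runtime = format_runtime(181)
```

A positive slope means the top movies are gaining that many minutes per year on average. With only 12 years of data, a single unusual year can move the line quite a bit.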

Takeaways

First off, don't think that you need to agree with the critics or the general audience. These scores are guides that can help you decide what to watch, but once you've watched something, who cares what they think?

Critics and audiences come from different perspectives, but they are trying to do the same thing. Critics see tons more movies, so they have more perspective on how movies stand up compared to everything else out there. That's a different perspective from someone who goes to the movies a couple of times a year.

This is only looking at the highest-grossing movies of the year, and since pulling the data is manual work, I'm not getting more. I focused on the most popular movies since those are the ones where I normally see people saying "critics just don't get it!!!" I'd love to know what the differences are for the movies ranked 21-100, but I also want to have a life, so someone else will have to get that 😉

Finally, if someone disagrees with you about a movie, don't just look at a score on an aggregator like Rotten Tomatoes; actually read some reviews to understand your differences. I try to do this when I dislike a movie that most people/critics seem to like. I want to hear what they liked, and sometimes it helps me see the film in a different light.
