Google asserted itself as a new type of platform company at Google I/O 2017

I watched every moment of Google's I/O keynote with great interest for where the company is headed in the next year. I am a big fan of the company overall, but their platforms such as Android and Chrome OS do not appeal to me that much. I simply think iOS and macOS are superior phone, tablet, and desktop operating systems for just about everything I do, but Google is still a large part of my computing life on those devices. Going into this I/O conference I had 2 questions:

- How is Google advancing their cloud services to pull ahead of the competition?

- Does Google have a plan to enhance Android and Chrome OS in sweeping, meaningful ways?

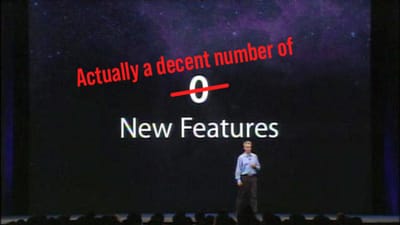

The answer to question 1 is that they're doing a lot in this area, and services are in good hands. The answer to question 2 is a resounding NOPE.

What I saw at Google I/O was a company that is focused on making their online services better today, and building out an infrastructure to make sure they can continue to innovate in the future. The conference kicked off with Google CEO Sundar Pichai waxing poetic about the scale of Google's AI operations, and how machine learning is making all of their products better. From YouTube suggestions to voice recognition to Photos search and more, machine learning is the lifeblood of this company, and it's what they are using to advance all of their products.

Google search continues to be the most important product for Google, and what we saw at I/O was basically all about search. I don't recall the Google.com page ever coming up on stage, but search was everywhere.

Google Assistant is the most important service Google has introduced in a decade, and the company is leaning into making Assistant the best way to do more than ever with your voice, no matter what device you are using. They bragged about their speech recognition, which has dropped from an 8% error rate to 4.9% in 4 years. They talked about personalizing Assistant on the Google Home to recognize different voices and give information according to that user (something Apple fanboys said was an unsolved problem and was a reason these products couldn't work well yet). They are adding a camera search function to Assistant on the phone to let you not only search for things you take pictures of, but also intelligently read the information in a photo and save it for you [1]. They are making Photos search better to the point where you will be able to select a bunch of photos (or search for them) and Google will create a photo album for you based on what it thinks are the best photos. There is a lot of stuff there, and if it all works well, it's going to be amazing.

This advancement of Google Assistant really made me think that Google's vision for the future of platforms like Android is not to have a bunch of apps, but to have the Assistant do as much for you as possible. By adding a smart camera to Assistant, they are making thousands of QR scanner and note-taking apps irrelevant. By adding reminders and shopping lists to Assistant, they're killing thousands more productivity and shopping apps.

And I see more of this coming down the line as Assistant gets more powerful. Email apps could easily be on the chopping block next. Imagine a future where many people don't need the Gmail app anymore because Google Assistant does this:

Message from James:

Hi Matt, I hope things have been well! Things have been going well for us, and we've been really busy with Sam's graduation coming up 2 weekends from now. I was writing to ask if you wanted to go golfing on Saturday at Fox Run. I can do any time between 10-2, so let me know if you're interested and what time would work for you. Best, James

What if Google Assistant could tell you there is an email from James like this:

James sent you a message. He hopes you are doing well. Sam is graduating on June 3. He would like to arrange golf with you this Saturday at Fox Run Golf Links in Elk Grove Village between 10am and 2pm. I see there are open tee times available right now. Would you like to say yes?

Yes, and could you reserve a tee time for 11am?

Ok, I have reserved a tee time for 2 at 11am at Fox Run. I've included that in the reply to James.

That is a somewhat simple example, but it's the sort of thing that a real assistant could do for you, and it would truly be another giant step towards digital assistants being more useful. Additionally, that example was done via voice, but it could also easily be done with Google Assistant's text input as well. That whole interaction could be done with a few taps in the Assistant app without saying a word.

This vision for the future is interesting, and could be very impressive if Google can pull it off, but it was a weird message to be sending at a developer conference. Maybe I'm too used to seeing Apple and Microsoft conferences where they speak at length about how they are building more and better tools for developers to tap into their platforms and create more value for their users, but Google gave almost nothing to developers in their 2-hour presentation. Everything was about Google's own services and how they are improving. It's very cool that Google Photos is getting better editing tools and that Google Home is adding voice calls, but what does that give developers? Nothing.

Even the company's talk around Android was mostly about the features they are adding that make the system better, not tools that developers can use to make their apps better. Even things like Autofill in Android were undermined by Google announcing "Autofill with Google" will be there by default and will have all your Chrome usernames/passwords already there to log into apps, even if the apps have not built in support for Autofill. Why would most people use 1Password or LastPass when Google already has this working out of the box? Other enhancements like OS optimizations, security enhancements, smart text selection, and notification dots are system updates that developers don't need to do anything for. Essentially the apps will run slightly faster than before, but there are no new system hooks to enhance functionality. I need to watch some of the developer sessions today and tomorrow to see what may be new that they didn't talk about at the main event, but there didn't seem to be much there.

The only significant advance I saw for developers was the availability of TensorFlow for all developers, which will give average joe developers access to some serious machine learning smarts. Based purely on time spent in the keynote, this is what Google is most interested in, and it's what they see as the biggest advance for developers. I don't know if this will actually be useful to the average dev, but I have hopes that smart people start using this newfound power for something clever.

Google's keynote was not particularly thrilling overall, and I was really struggling to make it through at some points in the show. Some products like Photos and Assistant are adding some really cool features, and as a user of both, I'm excited to see them come down the pipe. But their general lack of exciting updates for their device platforms, Android in particular, was disappointing. I'm no more likely to look at Android as my next phone than I was before I/O, and that's a little disappointing. That said, Google made a strong case that they are the king of consumer-oriented artificial intelligence and machine learning, and it's an argument I don't foresee Apple trouncing at WWDC next month. Then again, basically everything cool with machine learning Google showed off will be coming to iOS software as well via third party developers and Google's own apps, so I don't think iOS users will be missing out on much here.

There's more I/O to come in the rest of the week and I have a lot more to take in, but I wanted to take a little time today to get my initial thoughts out there.

1. As an example of this, they showed someone taking a picture of the serial number on a router. Google Assistant recognized it was a serial number and saved it to Google Keep and labeled it as such automatically. Really clever stuff.