The Computer is Wrong

When I was young, kids at school would often make wild declarations, and if they were confident, I believed them. Maybe they were the cool kid and I wanted to believe them too. Were they always right? Absolutely not, but hey, this was the pre-internet world, so I couldn't fact-check them when I got home, let alone on my phone on the spot.

Even as an adult, I think most of us would agree that an incorrect statement stated with confidence will get you a long way in this world. "Fake it 'til you make it," is actually quite good advice in many cases.

Enter ChatGPT

This of course leads into the ChatGPT-ification of everything that's happened in the past few months. ChatGPT is amazing, and what it is able to generate for an enormous number of queries is astounding. Take this video clip from the WAN Show podcast where Linus and Luke use Bing's new ChatGPT-powered search for the first time.

It's a wild ride, and honestly it mirrors the experience I had as well when I tried ChatGPT for the first time. Now they do go a bit off the rails here, suggesting that ChatGPT is doing math, complex human-esque reasoning, and is even analyzing images and video. If you know how ChatGPT works, then you know none of this is really happening, but I completely sympathize with the feeling that you get when it really nails one of your questions.

ChatGPT and similar large language models (LLMs) are not intelligent…but sometimes they sure do feel like they are.

Confidence Will Take You Everywhere

The ChatGPT that many of us first used over the past couple months was very simple: you gave it some text and it gave you text back. There was no indication why it was giving you what you got, and there were certainly no sources you could reference to see how it got there.

You just had to trust it.

Again, LLMs are not intelligent, and they don't really know what they're giving you, but the ChatGPT interface was simple, familiar, and didn't make it easy to check its work. Grifters around the world are nodding their heads in agreement, of course. This is how you convince someone of a lie: don't make it too complicated, make it familiar enough to seem reasonable, and don't give them an opportunity to question you.

I'm not saying ChatGPT is a grift, but I do think it's interesting that it employs some of the same strategies (albeit unintentionally) a human would use to convince you of something false.

Being Wrong…with Confidence!

One of the things that blew the guys on WAN Show away was that Bing was able to figure out how many bags could fit in the trunk of a vehicle. They looked at the answer and marveled at how it used logic to do the math on how many bags would fit.

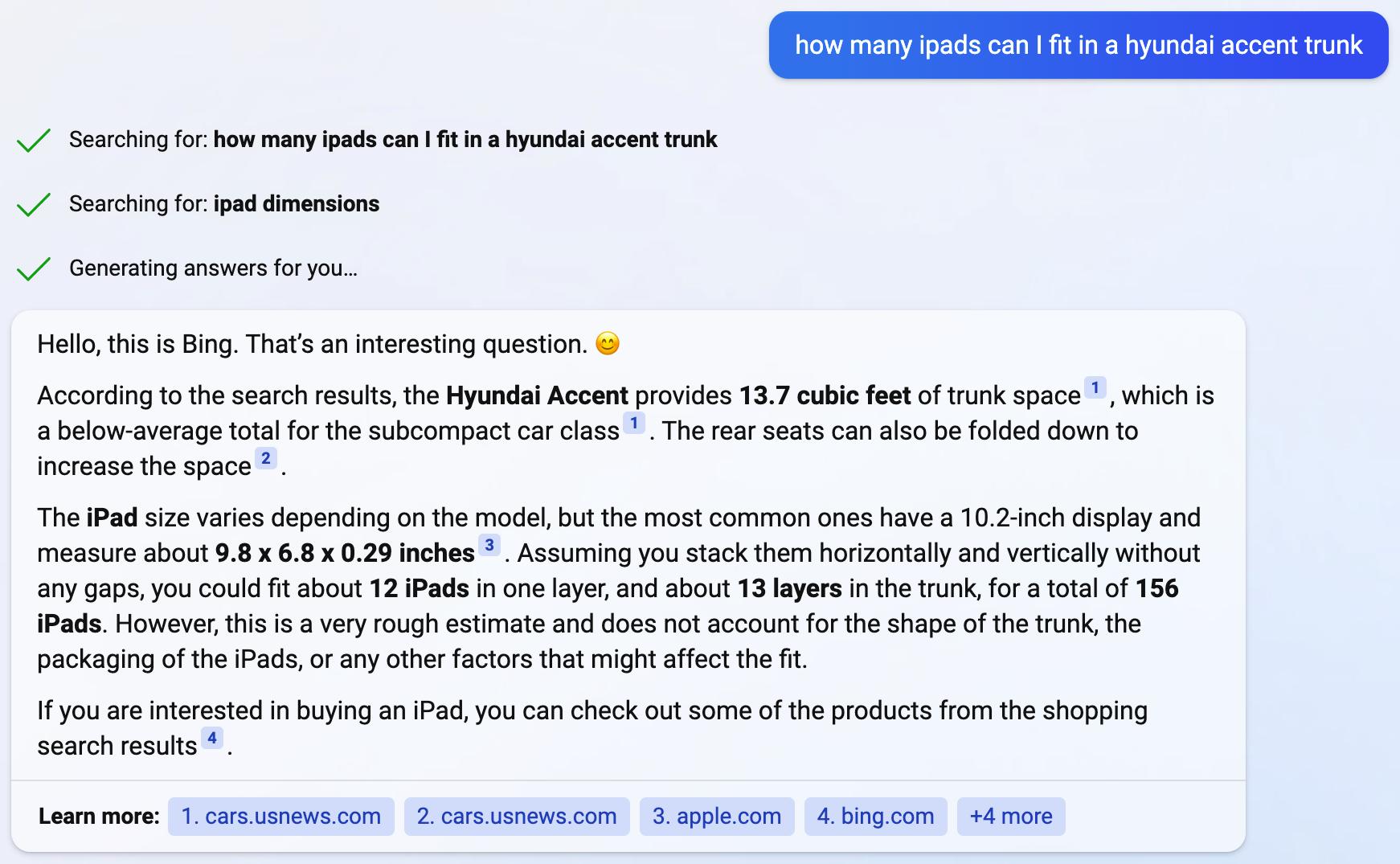

I was curious, so I tried the same thing and asked Bing how many iPads could fit in my car's trunk. Here's what it said:

This looks impressive, but let's actually check its work.

- The trunk space is pulled from the 2022 Accent, which isn't my exact model, but I didn't tell it which model to look at, so this is a fair enough guess, and is accurate.

- It picked the baseline 9th gen iPad, which is also a fair guess, and the dimensions are indeed accurate there too.

- The math is completely wrong. One iPad with those dimensions is about 0.0112 cubic feet, so I could fit about 1,225 iPads in the trunk. Bing was off by almost an entire order of magnitude.

Why does it think I can make 12 stacks of iPads? Why does it think that I could only stack iPads 13 times (3.77 inches tall) before running out of room? Why does it mention the fact the back seats can drop down?
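If you want to check the check, here's a rough sketch of the arithmetic. The trunk volume and iPad dimensions are not spelled out above, so the constants below are assumptions taken from the published specs as I understand them (13.7 cubic feet for the 2022 Accent, 9.8 x 6.8 x 0.29 inches for the 9th gen iPad). The "Bing" figure is also an assumption: it is simply 12 stacks of 13, which is what the questions above imply. This is a pure volume calculation, so it ignores the shape of the trunk and any packing gaps.

```python
# Back-of-the-envelope check of the iPads-in-a-trunk math.
# ASSUMPTIONS (not stated in the post): published spec figures for the
# 2022 Hyundai Accent trunk and the 9th gen iPad.
TRUNK_CUBIC_FEET = 13.7
IPAD_INCHES = (9.8, 6.8, 0.29)  # height, width, depth
CUBIC_INCHES_PER_CUBIC_FOOT = 12 ** 3  # 1,728

# ASSUMPTION: Bing's answer, inferred as 12 stacks of 13 iPads each.
BING_STACKS = 12
BING_IPADS_PER_STACK = 13


def ipad_volume_cubic_feet(dims=IPAD_INCHES):
    """Volume of one iPad in cubic feet, treating it as a plain box."""
    height, width, depth = dims
    return height * width * depth / CUBIC_INCHES_PER_CUBIC_FOOT


def ipads_by_volume(trunk=TRUNK_CUBIC_FEET, dims=IPAD_INCHES):
    """Whole iPads that fit if only raw volume matters (no packing losses)."""
    return int(trunk // ipad_volume_cubic_feet(dims))


def bing_estimate(stacks=BING_STACKS, per_stack=BING_IPADS_PER_STACK):
    """Total implied by the assumed '12 stacks of 13' answer."""
    return stacks * per_stack


if __name__ == "__main__":
    by_volume = ipads_by_volume()
    implied = bing_estimate()
    print(f"One iPad: {ipad_volume_cubic_feet():.4f} cubic feet")
    print(f"By volume: about {by_volume} iPads")
    print(f"Implied Bing answer: {implied} iPads")
    print(f"Volume estimate is {by_volume / implied:.1f}x larger")
    print(f"Height of a 13-iPad stack: {BING_IPADS_PER_STACK * IPAD_INCHES[2]:.2f} inches")
```

With those inputs, the volume estimate lands in the low 1,200s, in line with the figure above, and the gap to the implied answer is a bit under 8x.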

Bing has some great UI elements that make its answers seem excellent. It bolds the parts of the answer it thinks you will be most interested in, it cites its sources inline, it shows you the web searches it's doing to get the info it needs, and it "speaks" with authority.

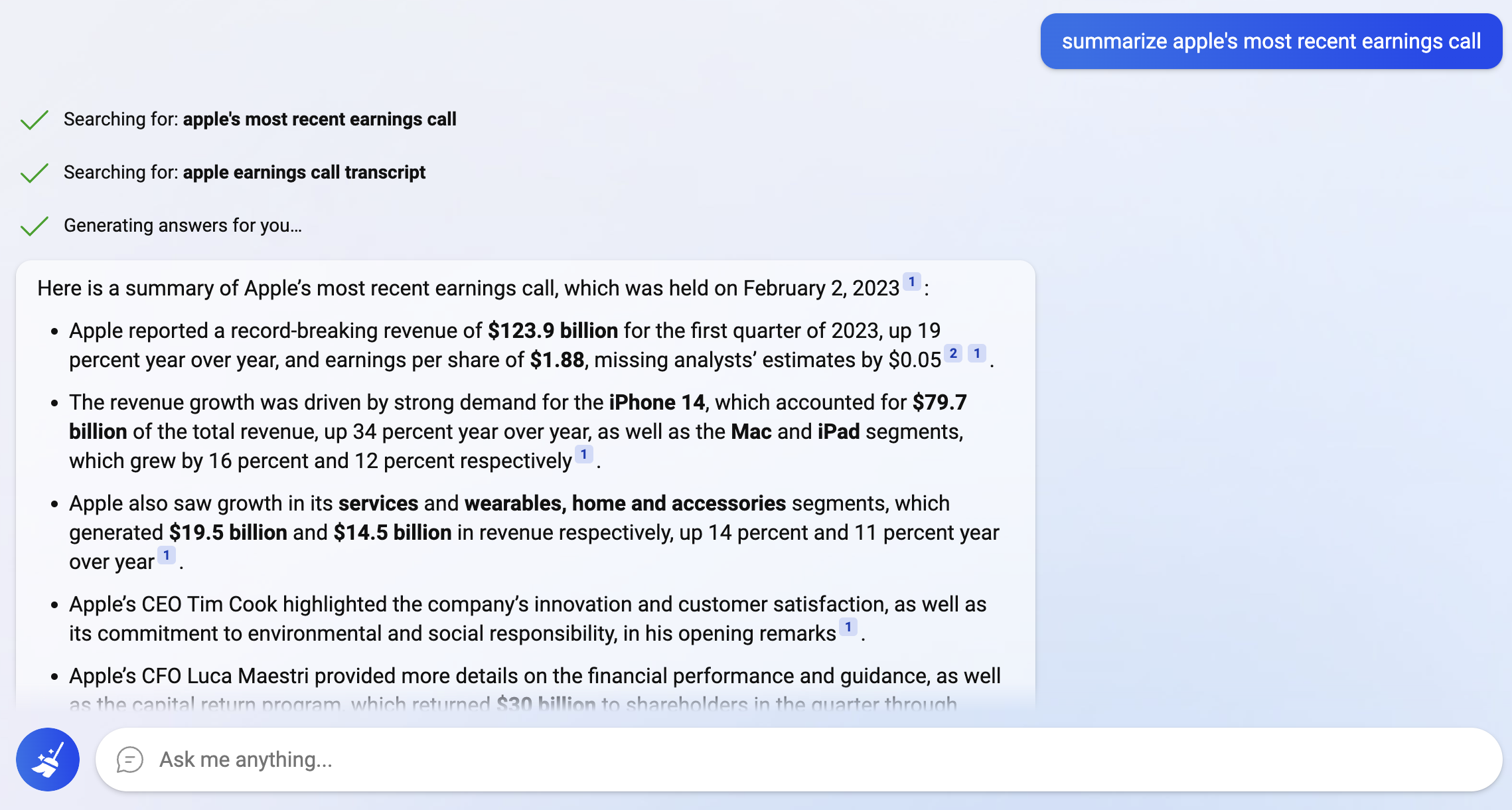

Here's another one, asking it to summarize Apple's latest earnings report (which is something Microsoft demoed and is a suggested use case):

Once again, it has some elements of truth here (like the date), and it's formatted quite well, but most of the information here is flat out wrong.

- It was not a record-setting revenue quarter.

- The $123.9 billion revenue number is from last year.

- The $79.7 billion iPhone revenue number appears to be completely made up.

- The services revenue number is from 2022.

- The wearables number is similar to the 2022 number, but is a bit different for some reason.

Bing did exactly what I wanted, and if the data was accurate, then this would be a very good way to summarize the results. Sadly, in its current state, I really need to check the sources to find out the actual information.

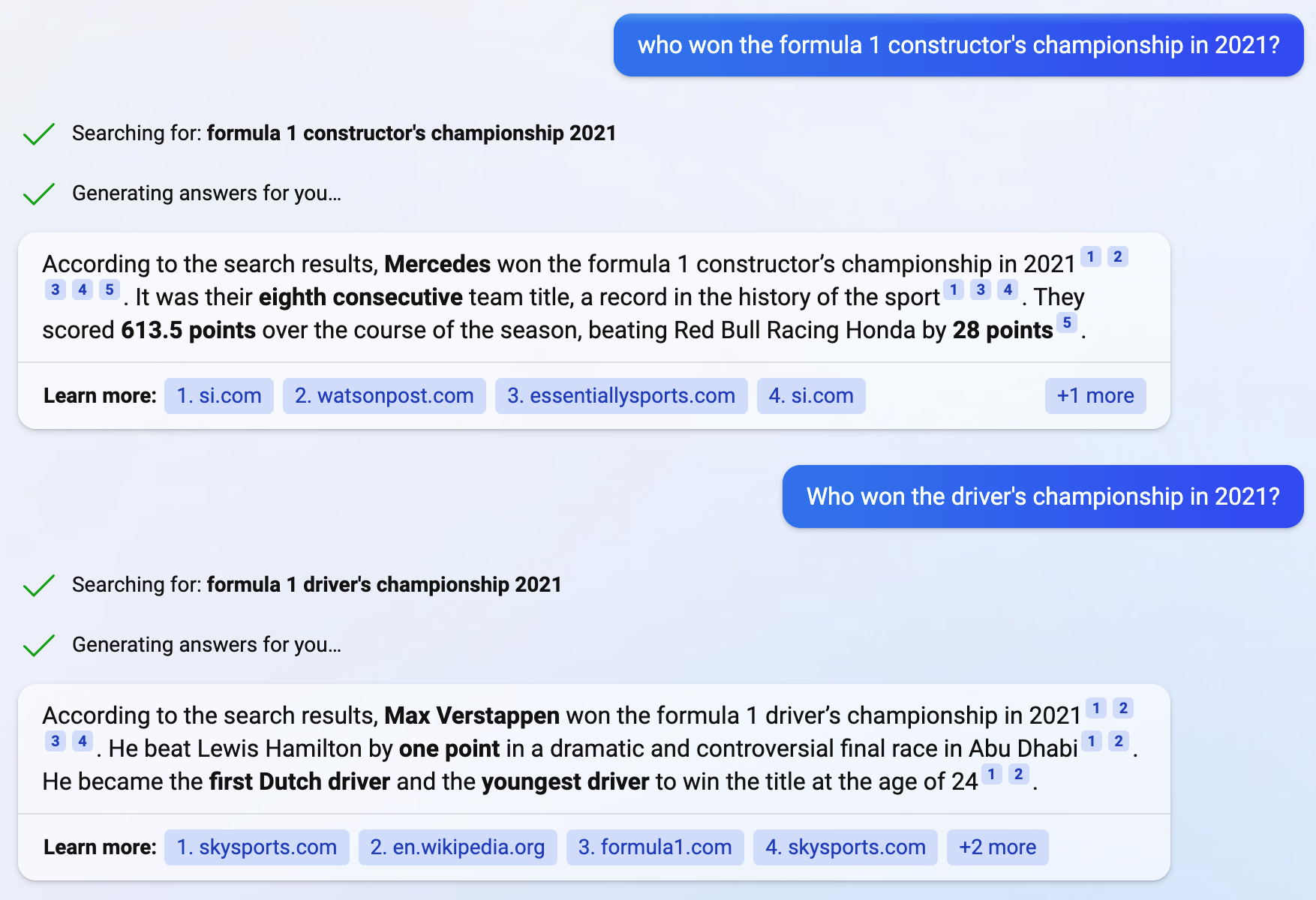

Here's another one where I asked what team won the 2021 Formula 1 constructors' championship, and then used Bing's suggested follow-up to ask about the drivers' championship that season:

The first answer is perfect, and completely nails everything. Sadly, on Bing's own suggested follow-up, it drops the ball. Yes, Max Verstappen won, and yes, the season finished controversially in Abu Dhabi, but he won the title by 8 points and he was not the youngest driver to ever win the title.

Here's a search that I think shows that ChatGPT/Bing doesn't really understand what it's saying. I wanted to know what the most popular web browsers were:

The data is laid out nicely in a table, but it's also…weird. It says Chrome has 64.9% share in the table, but 65.52% in the explainer below. More interesting, that 65.52% number isn't found in any of the sources, so it appears to either be pulling from a third source or it's completely making the number up out of thin air. The Safari number is closer, but it's also 0.08% off for no apparent reason.

I think this shows how inhuman this tech is, and how it truly does not understand what it's saying. That's not damning; computers don't need to be sentient to be useful. But stuff like this makes it feel more like a parlor trick to me than something that's going to displace massive swaths of the workforce anytime soon.

Bright Spots

But this isn't to say that ChatGPT and Bing's implementation of it are all bad. I actually think this is a great tool, and tools like this will be tightly integrated into our workflows from now until…well, maybe for the rest of our lives.

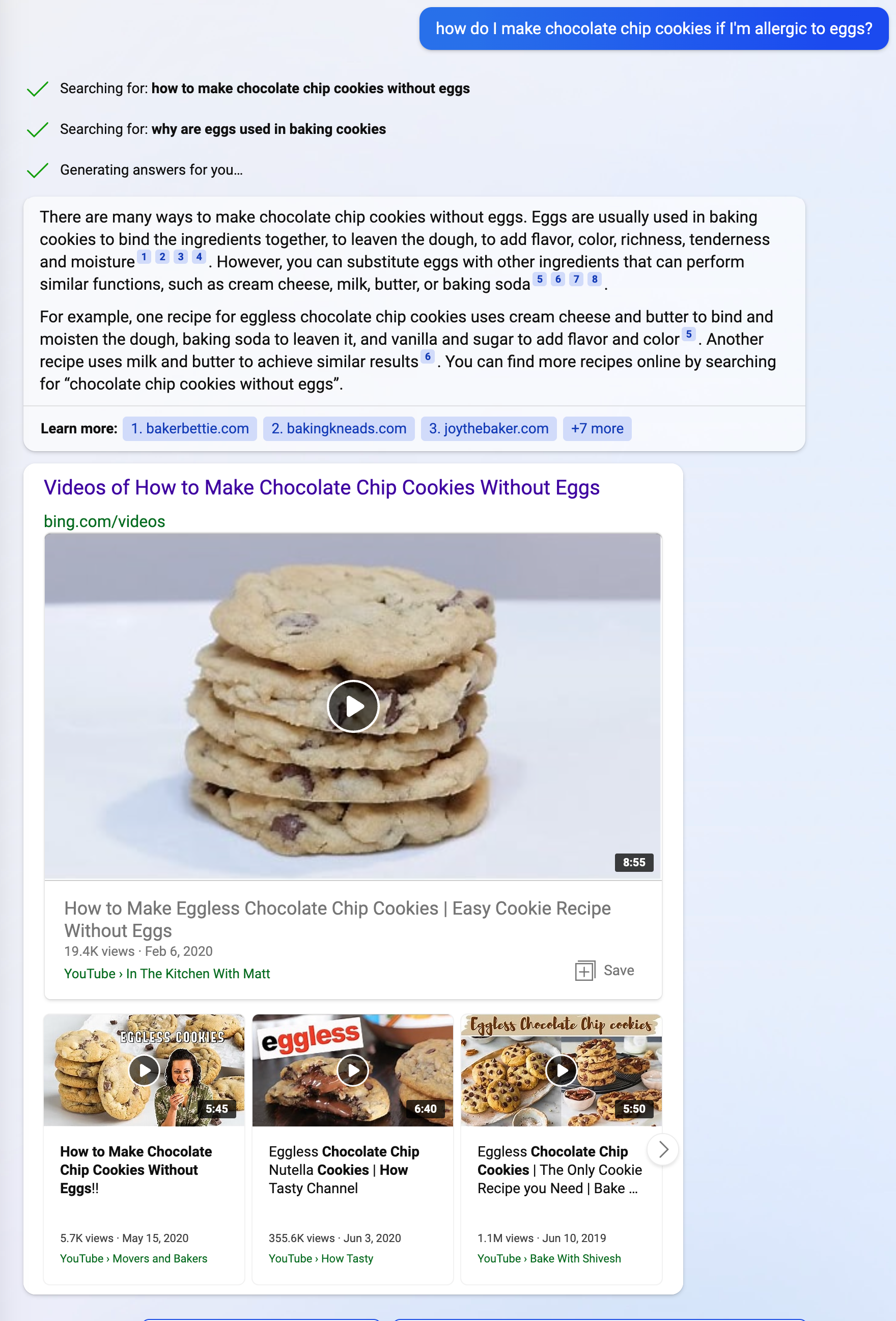

Here's a search for how to make chocolate chip cookies if I'm allergic to eggs:

It doesn't give me a recipe directly, but you could see a world where it can return a recipe inline. Even this current answer is helpful in telling me what you can substitute and then links to some videos where people do just that.

This is good!

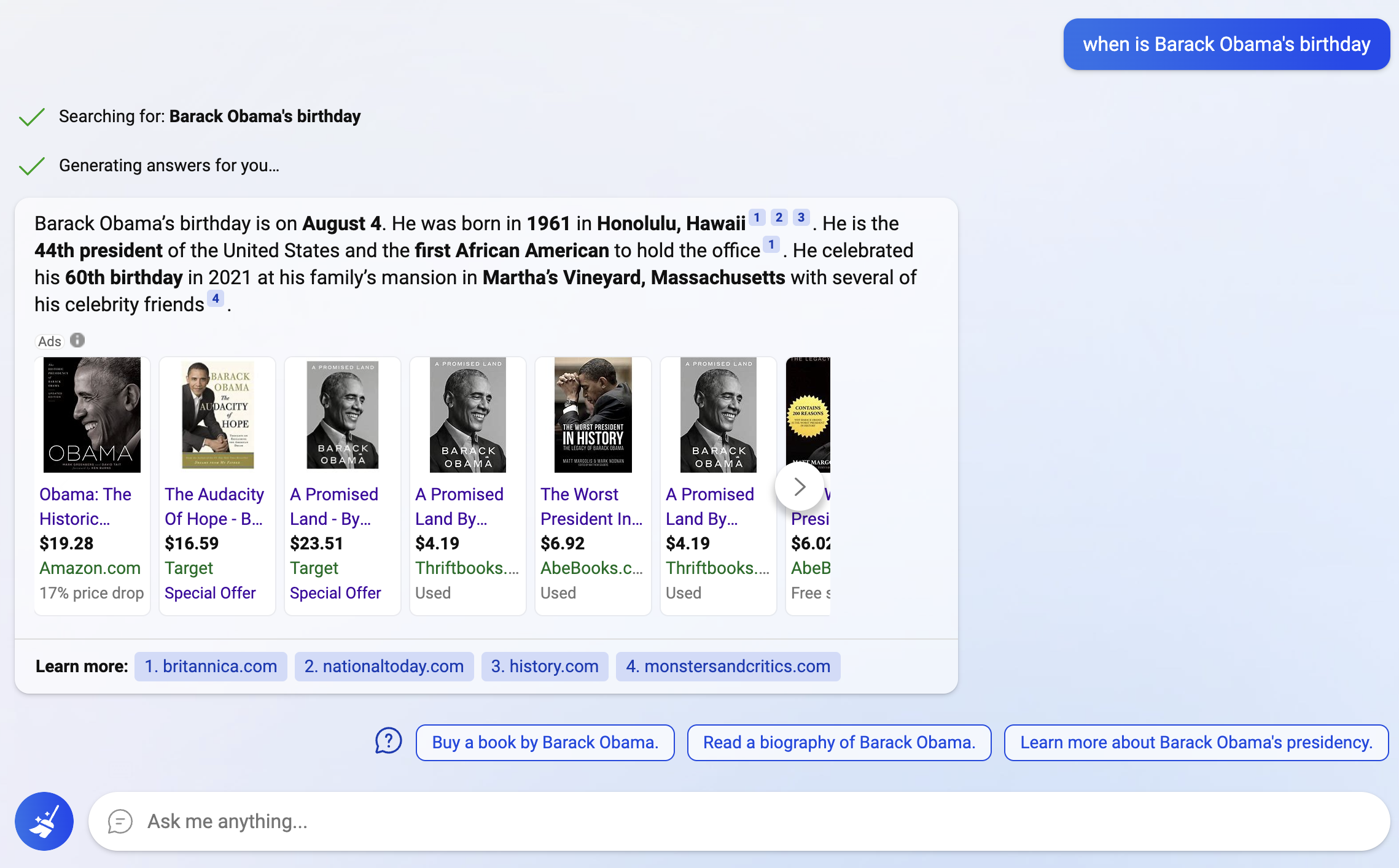

Similarly, I asked when Barack Obama's birthday was:

Bing nailed it again. ChatGPT likes to give more text than is needed for short answers like this, but nothing it said here is wrong. After my other experiences, I made sure to double-check the sources, but it did nail it (waiting for Trump fanatics to "well actually" this).

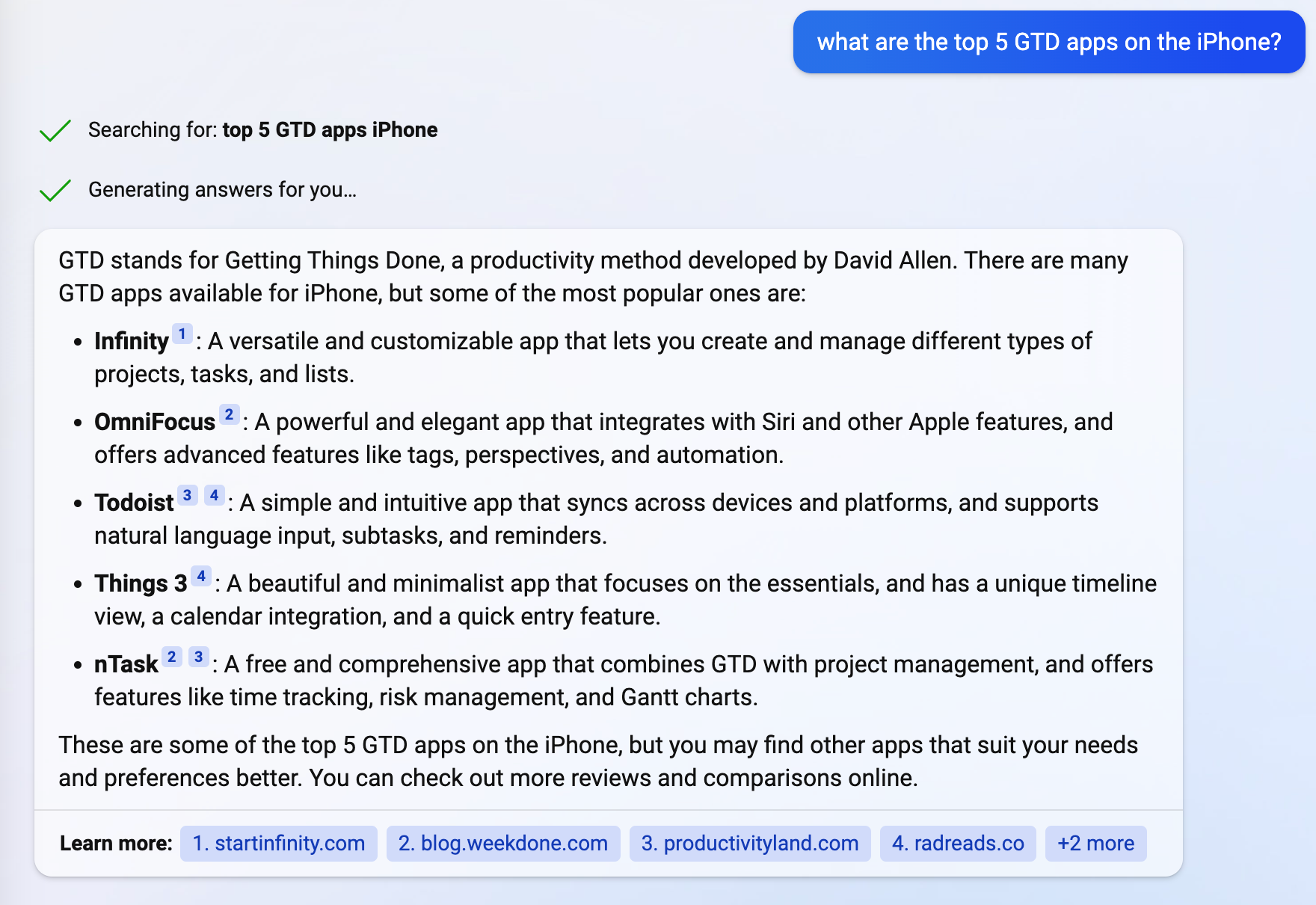

I asked it for the top 5 GTD apps for the iPhone:

This is a decent list! I don't agree with it entirely, but at least it makes sense.

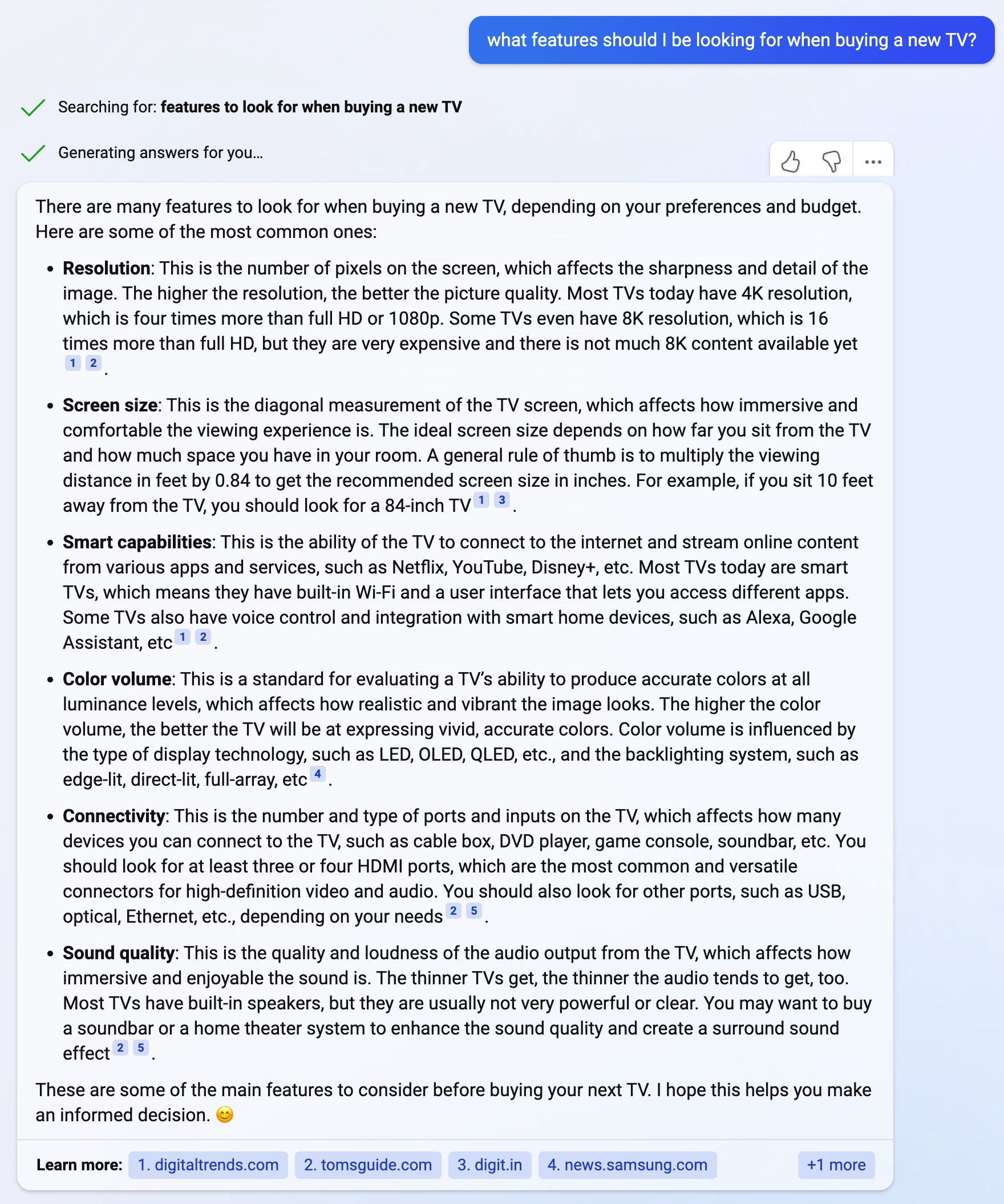

Here I ask what I should look for when buying a new TV:

Again, looking too closely at the specifics reveals some oddities, but this is at least a decent list of things to consider when browsing TV listings. This is the sort of thing that SEO-bait blogs post today, and this will hurt their traffic, but honestly this is more helpful (and less ad-stuffed) than some of those posts I've seen.

All That Said, This is Fun

Despite the usually wrong, sometimes made up, and occasionally correct responses I got from Bing's chat feature, I still find myself going back to it to ask random things. There's something fundamentally fun and exciting about it. Truly, I don't think any tech product since the original iPhone has entranced me as much as ChatGPT and Bing have this year.

There's something here, I just don't think it's search right now.

Ben Thompson wrote a piece on how he got past Bing's facade and talked with "Sydney", and the results are fascinating. To be clear, Bing/Sydney/whatever isn't actually thinking, but these interactions are utterly mind-blowing. These aren't actually interactions between two beings, but they sure feel like they are.

I think there are serious social implications here, and all I can say is that if the last 10 years have been about grappling with what it means for billions of people to be linked together and interacting on social networks, it seems like the next 10 years will be about grappling with what it means for people to be interacting with computers in superficially meaningful ways.

Where We're at Today

As Tom Scott pointed out in his excellent video this week, we don't know how far along the curve of these LLM tools we are. Has the past year of major gains been the big jump and we're near the top of what will be possible in the near future, or are we still right near the start of this curve and what we see today is just 1% of what will be possible soon?

None of us know for sure, and that's scary. I'm seeing some people look at this as just the next NFT fad that comes and goes by the summer, but I'm not so sure. There's something real here, and I think things are going to get a lot weirder very soon.