The Future of Multi-Camera Smartphones

In 2018 you have many options when it comes to smartphone cameras. Since the iPhone 7 Plus in late 2016, there has been a trend towards dual-camera setups [1]. Basically any phone that’s serious about cameras has two lenses on it nowadays. The exceptions are the Huawei P20 Pro (three lenses) and the Google Pixel (one lens). So where are we heading?

I have been very open with my belief that dual-camera setups, especially those where the second lens is telephoto, are the way forward. On the one hand, you get more data in every photo you take, because the two lenses capture slightly different views and software can combine them into one image that is better than what either lens could produce on its own. “Portrait mode” is also very cool, and it lets people who have never owned a fancy camera take portraits they could never have taken before. And finally, having a lens that can zoom without simply cropping in on a photo is incredibly useful in so many real-life situations where you want to get closer to the action without turning your images or video into a pixelated mess.
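To put a rough number on the "pixelated mess" problem, here is a minimal sketch of what cropping costs. It assumes a 12 MP sensor as an illustrative figure, and `cropped_megapixels` is a hypothetical helper of mine, not anything from a camera API. The idea is only that cropping to imitate a zoom factor throws away pixels in both dimensions.

```python
def cropped_megapixels(sensor_mp: float, zoom: float) -> float:
    """Megapixels left after cropping a frame to imitate `zoom`x magnification.

    Cropping to zoom x keeps 1/zoom of the width and 1/zoom of the height,
    so only 1/zoom**2 of the original pixels survive.
    """
    if zoom < 1:
        raise ValueError("zoom must be >= 1")
    return sensor_mp / zoom ** 2


# Illustrative assumption: a 12 MP sensor.
for zoom in (1, 2, 3):
    remaining = cropped_megapixels(12, zoom)
    print(f"{zoom}x crop keeps {remaining:.2f} MP")
```

Under that assumption a 2x crop leaves only a quarter of the pixels, which is the gap a real telephoto lens (or very clever software) has to close.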

On the other hand, I have been using the Google Pixel 2 for the better part of a year, and its single-lens setup takes some of the best photos I have ever seen from a smartphone. In almost every test I throw at it, it’s neck-and-neck with the iPhone 8 Plus that I also carry around. This has given me pause about whether my previous belief that multiple glass lenses were required actually holds water. Was it just a matter of better software, hardware be damned?

The Pixel 2 inspires confidence that smart software can replace the need for multiple lenses. If we can use processing software to reduce noise and sharpen edges, that’s a hell of a lot more efficient than building two lenses. If we can figure out depth with a single lens instead of two, why not? At its best, software makes hardware irrelevant, so this seems like a natural direction to go.

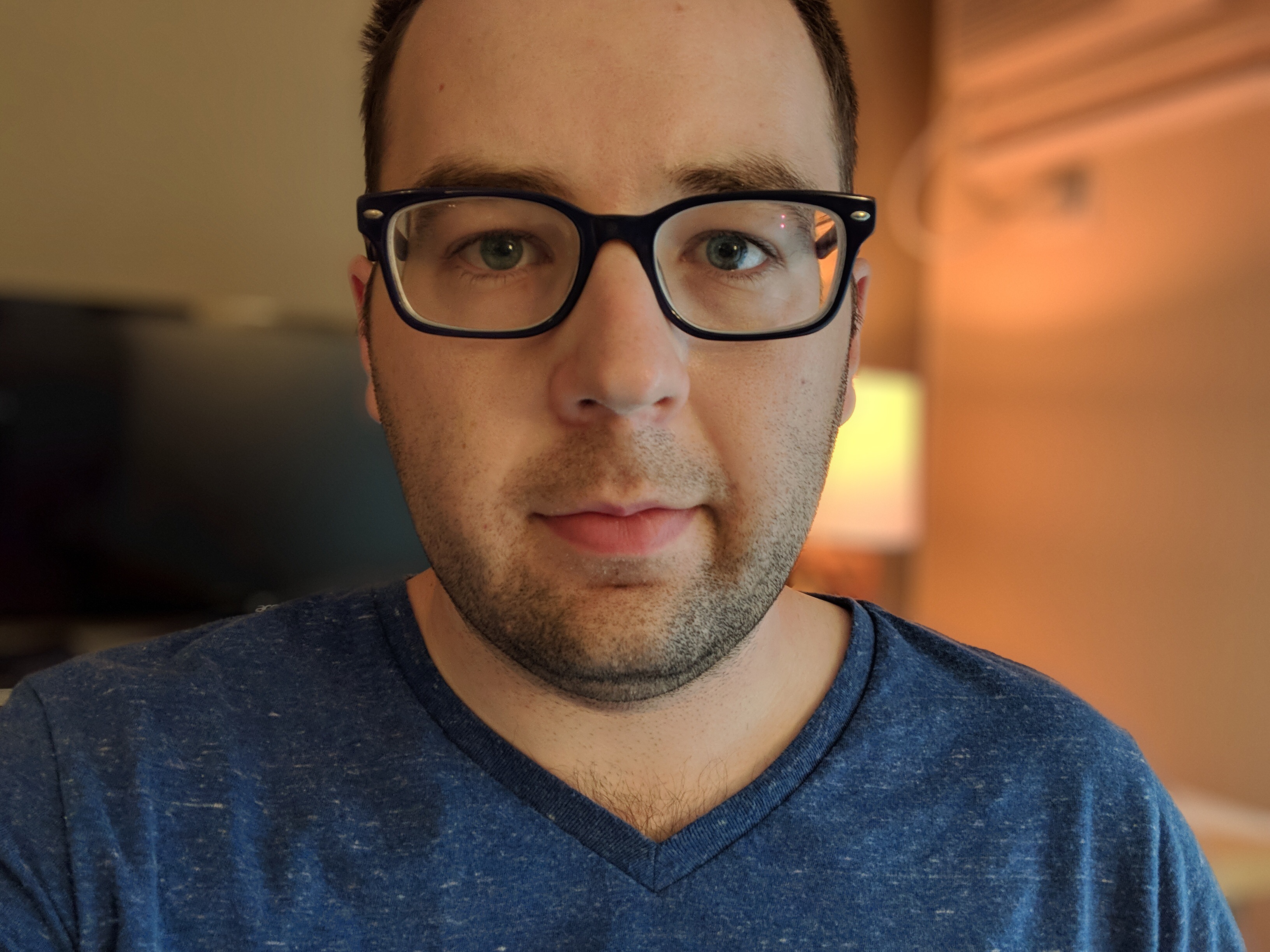

I don’t think we’re there yet, though. Take a look at this photo comparing the zoom on the iPhone and the Pixel:

The iPhone resolves more color and detail while introducing less noise to the photo. I also see value in the telephoto lens when it comes to portraits. Portraits are about more than just a blurred background; they’re also about perspective. Take a look at the two photos below:

The first image was captured with the Pixel 2 and the second with the iPhone 8 Plus. Now, the Pixel 2 does a better job of capturing detail (look at the stubble!), but I like the look of the iPhone’s image more. The longer focal length, and the extra distance it puts between the camera and my face, gives my face a totally different shape that most people find more flattering. This is why you almost never see professional photographers using a wide lens to take portraits at weddings or other events. Wide-angle lenses are great for everyday shooting, but portraits of people tend to look worse with them. The Verge recently ran a story about this called “Your nose isn’t really as big as it looks in selfies”, which does a pretty good job of explaining why we don’t feel like we look our best through wide-angle lenses.
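The perspective effect can be sketched with a simple pinhole-camera model, where apparent size is proportional to 1 / distance. The numbers here are my own illustrative assumptions (a nose sitting about 10 cm in front of the ears, and made-up shooting distances), and `nose_to_ear_magnification` is a hypothetical helper, not something either phone exposes.

```python
def nose_to_ear_magnification(camera_to_nose_m: float,
                              nose_to_ear_depth_m: float = 0.10) -> float:
    """How much larger the nose is drawn relative to the ears.

    In a pinhole model, apparent size scales with 1 / distance, so the ratio
    of nose scale to ear scale is (distance to ears) / (distance to nose).
    The 0.10 m default depth is an assumed, illustrative value.
    """
    if camera_to_nose_m <= 0:
        raise ValueError("distance must be positive")
    return (camera_to_nose_m + nose_to_ear_depth_m) / camera_to_nose_m


# Assumed distances: arm's-length selfie, a wide-lens portrait, and a
# telephoto portrait taken from roughly twice as far for similar framing.
for distance_m in (0.3, 0.75, 1.5):
    ratio = nose_to_ear_magnification(distance_m)
    print(f"{distance_m} m: nose drawn {100 * (ratio - 1):.0f}% larger than ears")
```

The ratio shrinks toward 1 as the camera backs away, which is one way to read why a longer lens tends to flatter: to fill the frame with the same face, you have to stand farther back.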

Now, of course, software will probably catch up here. While the tech of 2018 is not there yet, I can clearly see a future where smart software is able to resolve more data from photos and make “zoomed” shots look just as crisp as those shot with a truly longer lens. There is already photo software that tries to fix the “big nose” problem in portraits taken with wide lenses.

Software will surely solve the problems single-lens camera setups have today, but I wonder how much multi-lens camera systems will advance over the same period. If by 2020 we have software that fixes all the limitations single cameras have today, that’s fantastic, but it’s only as exciting as how it compares with what multi-camera systems can do by then. One would assume that they will progress at a similar rate and the gap will remain, but we just don’t know right now.

For my part, I still prefer the dual-camera setup, but the Pixel 2 has made me appreciate how much can still be done with just one lens. As for how many lenses my phone will have in five years, I have no idea.

[1] Although the HTC One M8 did this first back in 2014 with its shitty 4MP sensors. You did get some really bad depth effects even back then.
