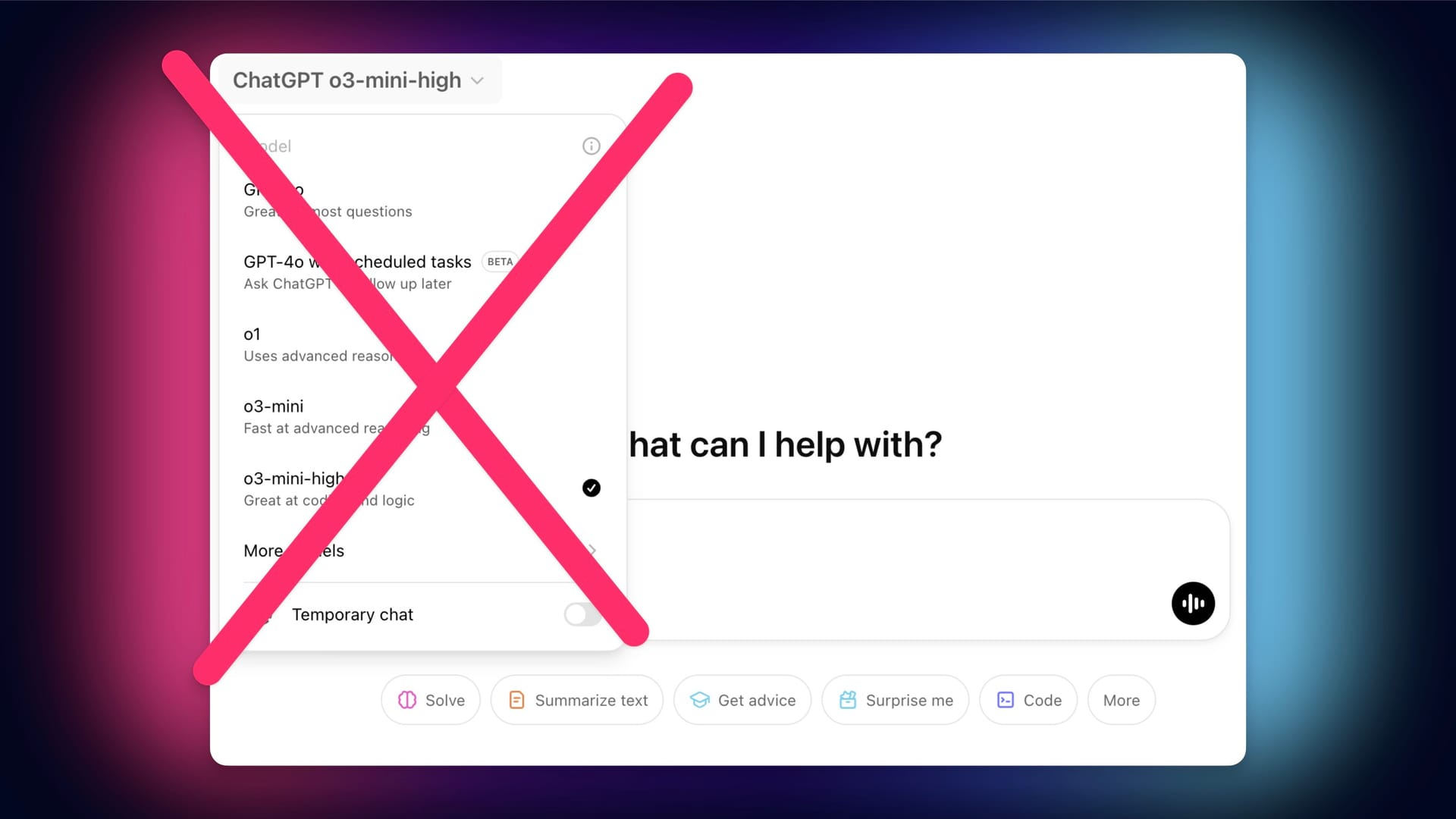

This menu needs to die

M.G. Siegler: The Great AI UI Unification

And depending on which model you choose to use, you get other options from there, as various buttons will be enabled in the message box for 'Web Search' and now 'Deep Research'. Oh and I forgot the '+' button, which has more options depending on where you're using it. And, of course, there's the '...' button in this box too, giving you a sub-menu for the 'Image' or 'Canvas' options. Oh yes, and Voice Mode. (And voice transcription on certain devices.)

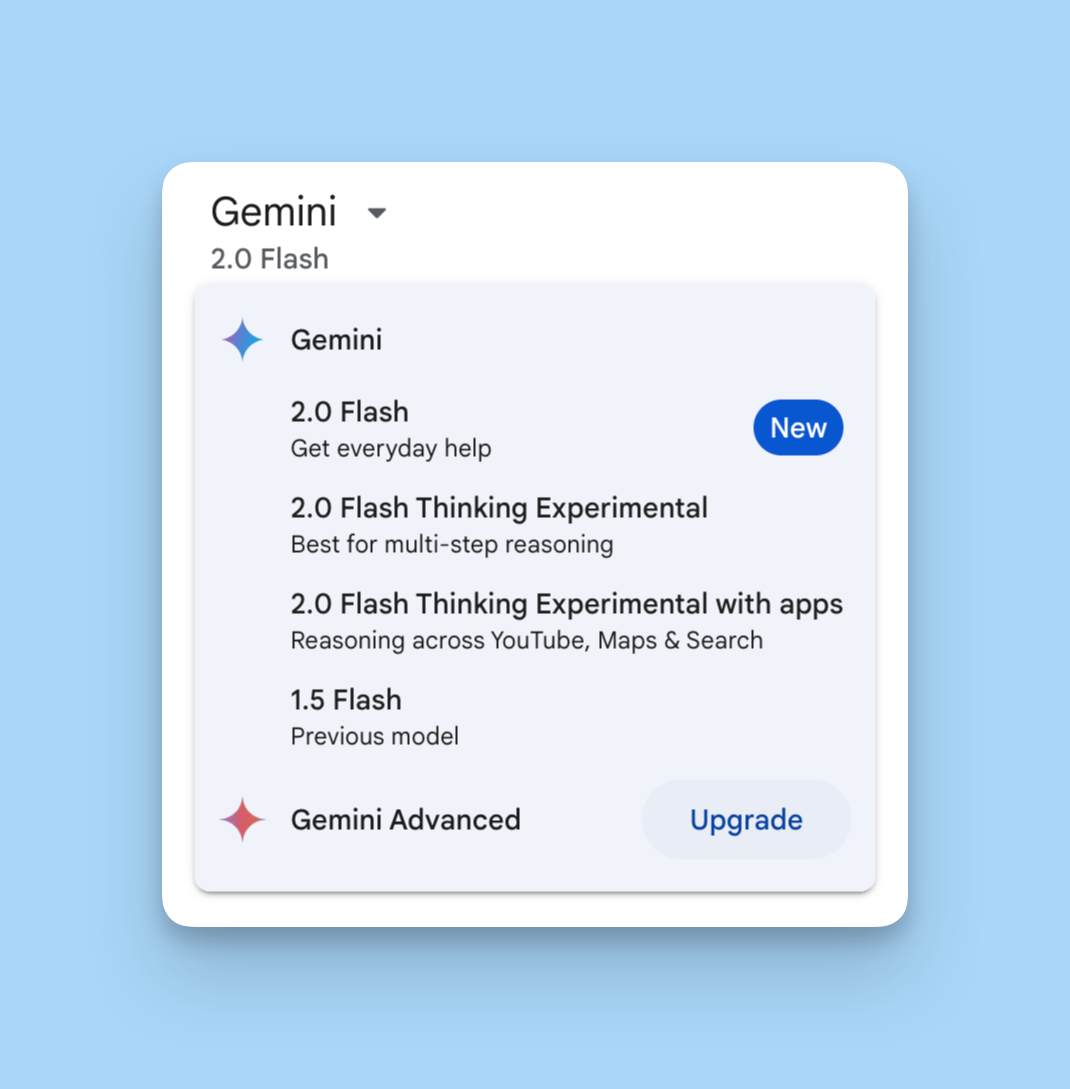

Here’s what you see in Google Gemini:

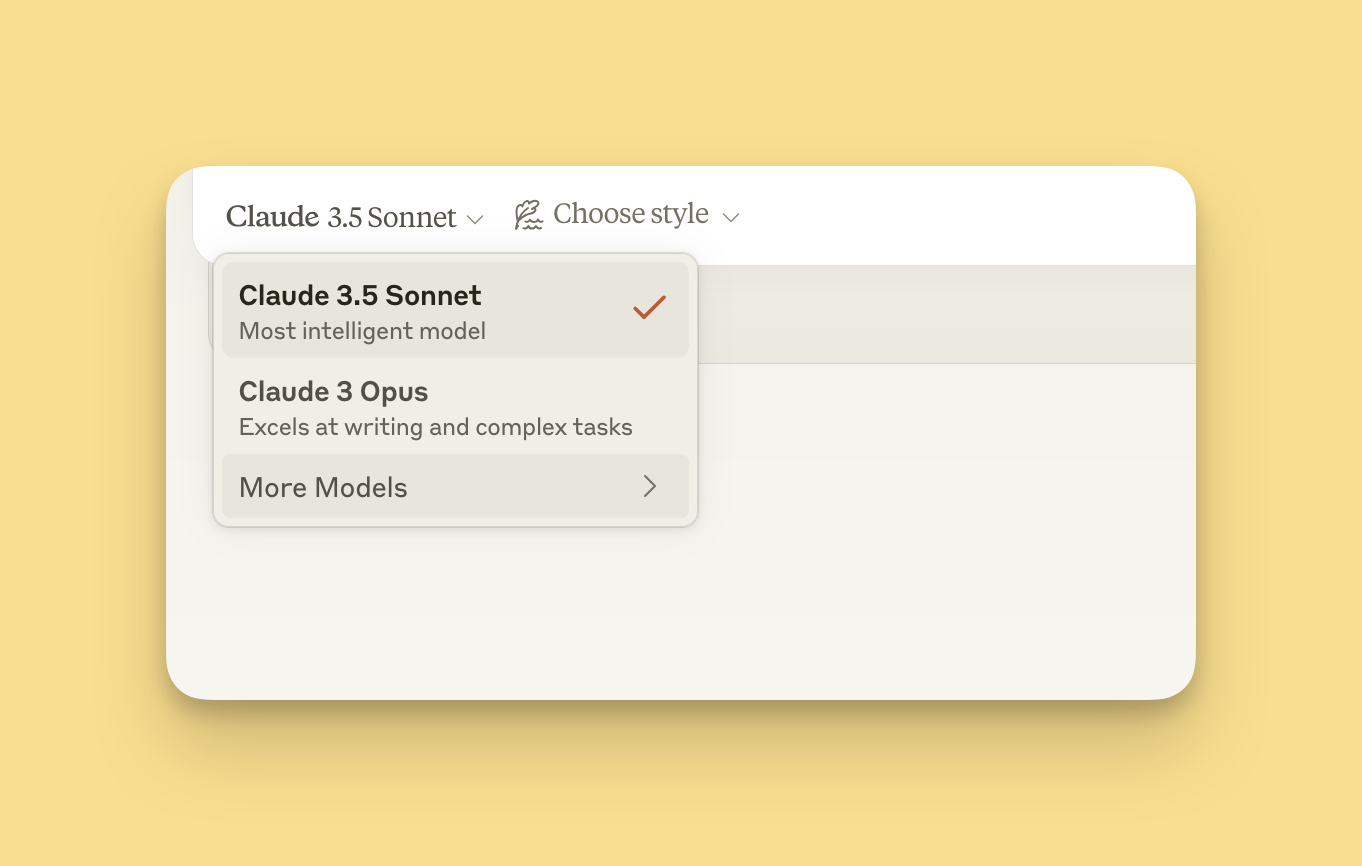

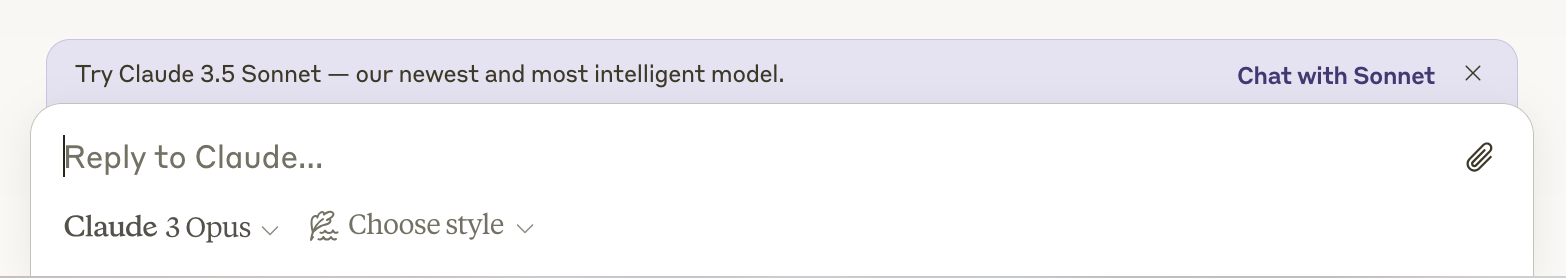

Here’s Claude:

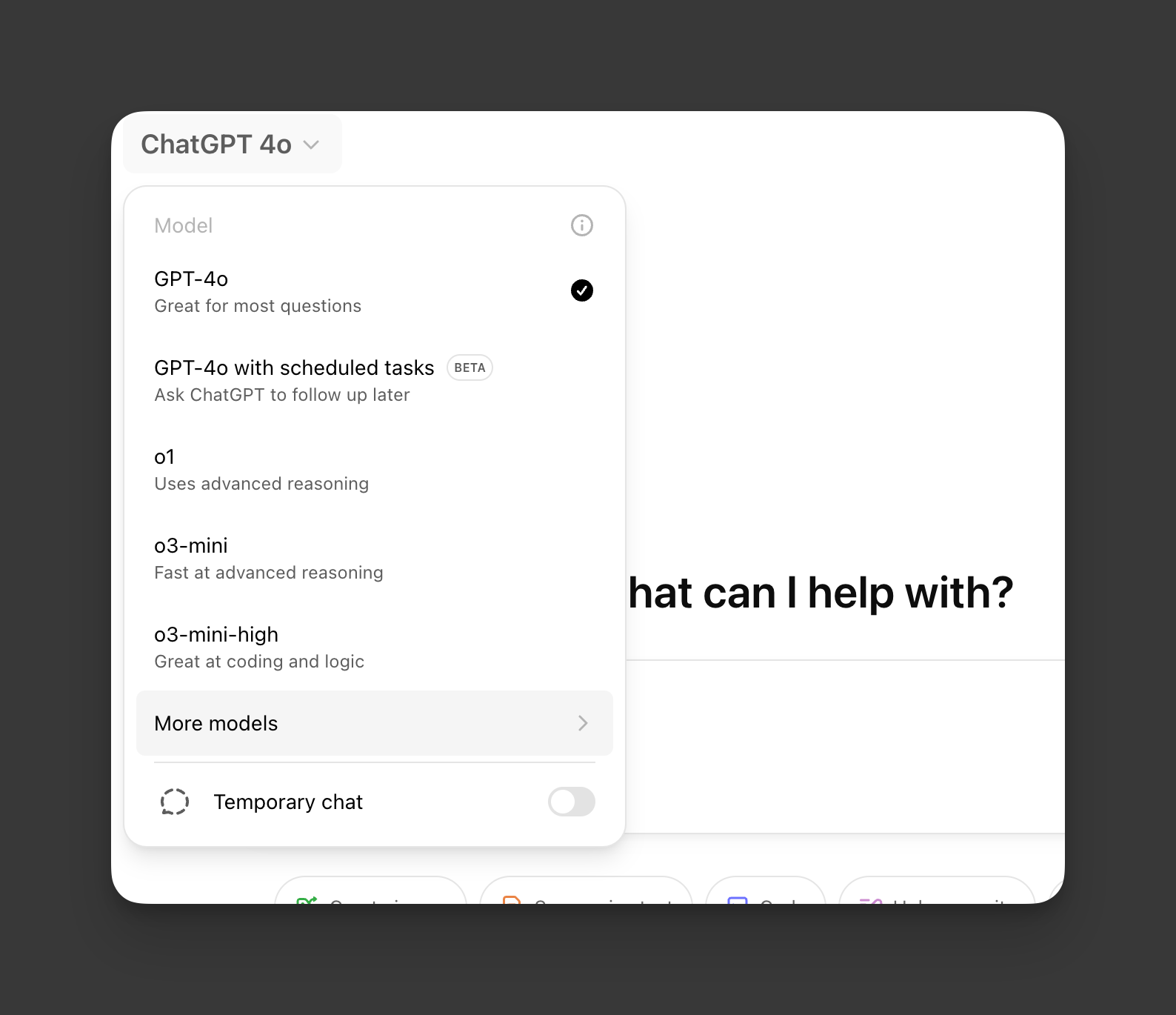

And here’s ChatGPT:

I think this is insane and needs to end as soon as possible. In my most recent video, I talked about how if I’m going to use an LLM, I always want to use the best model. The problem is that it’s not very clear what “the best model” is, even to people like me who are more up on what these models actually are than probably 99.9% of the population.

Looking at ChatGPT alone, if I have a question or a task I want it to do, what model should I pick? I know that o1 is newer than 4o, and o3 is newer than both of them, so I should go with o3, right? Well, o1 is their “full size” model and o3 is only available in its mini variant – is a newer mini model better than the last-gen full model? Should I go with o3-mini or o3-mini-high? One specializes in “logic” and the other specializes in “reasoning”. What the fuck is the difference between logic and reasoning? And oh shoot, neither o1 nor o3 supports web search, so forget everything else, if I want sources or any up-to-date information, I need to use 4o anyway.

That’s a mess.

Claude probably does it best by defaulting the user to their best model and making no bones about which model is the best…it’s 3.5 Sonnet, and there’s basically no reason to use anything else. They’ve got 3.0 Opus in there as well, but it’s pretty clear that 3.5 is better, and honestly I’m not entirely sure why Anthropic leaves it around for easy access, since whenever you use it the UI tells you that you probably want to be using their better model.

And then there’s Google, which is just fully unhinged. I’m not even on the paid tier with all models, but it seems to me there is no reason to ever use anything besides “2.0 Flash Experimental with apps,” right? Why would I ever use Flash 1.5 when Flash 2.0 is right there?

As M.G. points out, these are quite reminiscent of how search engine pages used to look before Google. It seems obvious now, but one of Google’s big innovations in the early 2000s was that it was just a text box: you entered something and it figured out what you were looking for. Enter a product, get shopping results. Look for something that’s been photographed a lot, get image results inline. More recently, ask a question with a simple answer, get the answer right at the top of the results page. The other guys made you check some boxes or select from some drop-downs or even go to other products entirely to do all this, but Google made it easy. We need to get to this with chatbot interfaces today.

The good news is that this seems to be something OpenAI and Anthropic are working on already. Word on the street is that Anthropic has been slower than others to get their next model out the door because this is a key component of what they’re working on: unifying everything in a way that won’t make users choose a model at all. And Sam Altman has said that after they ship GPT 4.5, they’ll work on getting rid of this complexity as well.

We’ll see how close they get to this, but it’s gotta get better than it is now.