What people do with an empty text box

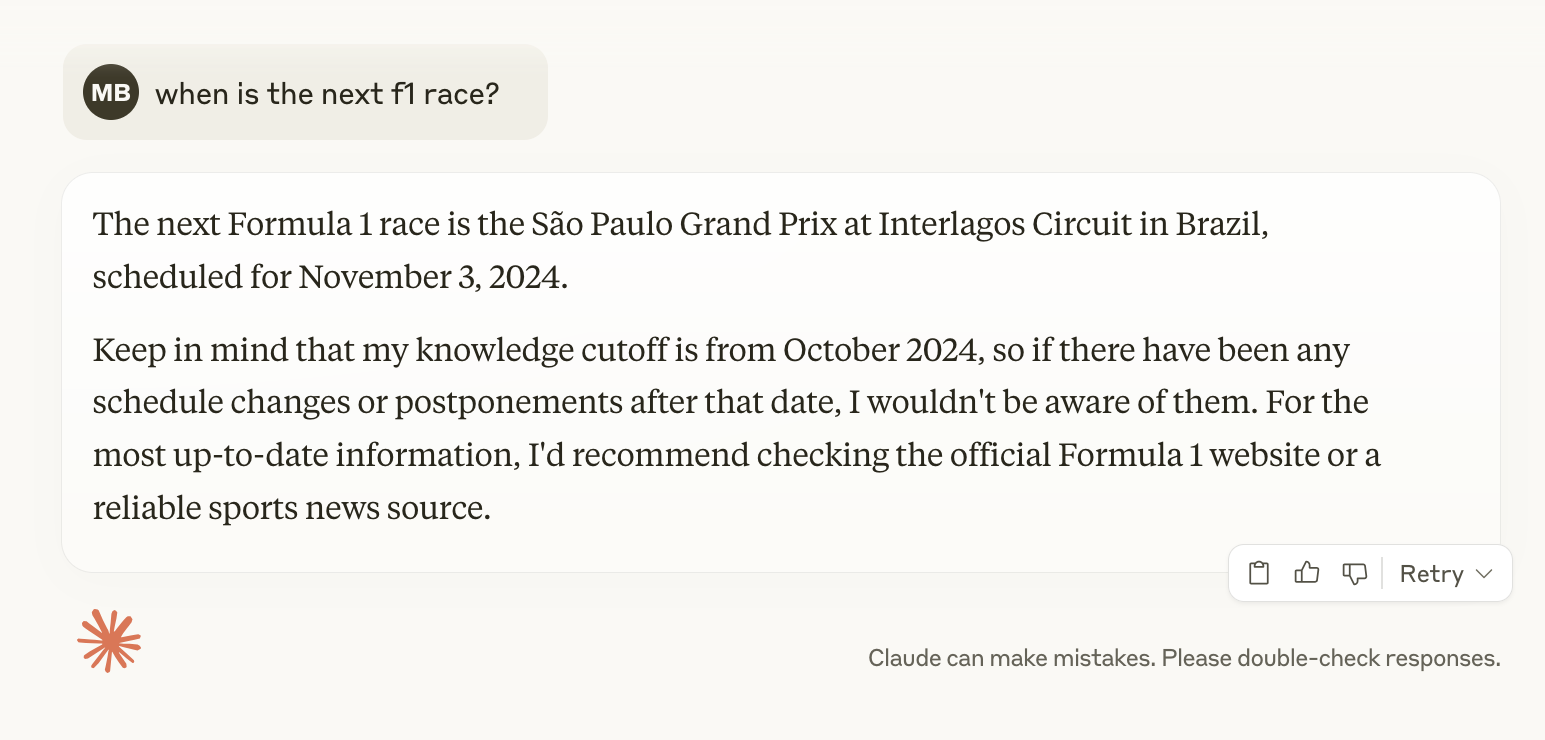

Update: literally minutes after posting this, Anthropic added web search to Claude. This improves the situation for Claude, but other models and apps using Claude/GPT/etc. still have the issues below.

I was listening to Hard Fork this weekend when Kevin Roose said this (emphasis mine):

> I think that there is sort of a basic assumption if you're a heavy user of, say, ChatGPT, that there are certain things that it's good at and there are certain things that it's not good at. And if you ask it to do one of the things that it's not good at, you're not going to get as good of an answer.
>
> I think that most people who use these systems on a regular basis kind of understand what they are good and not good at doing and are able to skillfully navigate using them for the right kinds of things.

I think people at large have always had misguided impressions of how computers work, and I would not assume that most people using ChatGPT are well aware of what it’s good at or how much they should trust it. I also don’t think everyone has a good understanding of why an LLM might give them a radically incorrect answer to what they think of as a basic query.

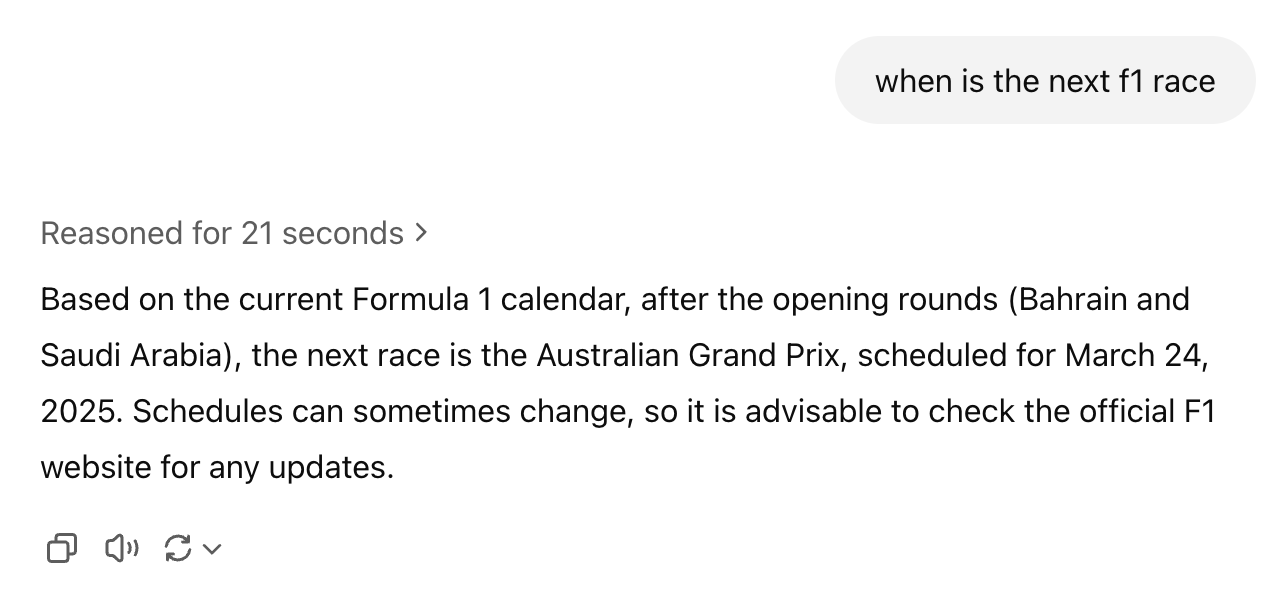

For example, here’s me asking Claude when the next F1 race is:

The actual answer when I asked this question was the Chinese Grand Prix on March 23, 2025, so how did this supposedly smart LLM that Matt (hey, that’s me!) says is so useful get it so wrong? It’s pretty simple, actually: Claude doesn’t have internet access or know today’s date, so it can’t give a good answer to this question. Also, its training data cutoff was October 2024, so its answer was actually correct from the perspective of an LLM that’s stuck forever in 2024 spooky season.

Asking ChatGPT o3-mini-high, OpenAI’s most advanced “reasoning model”, the same question returns a different result.

I can see in the reasoning that the ChatGPT app is passing in the current date, so the model knows what day it is, but it doesn’t know the race schedule and gets the answer wrong in a new way.

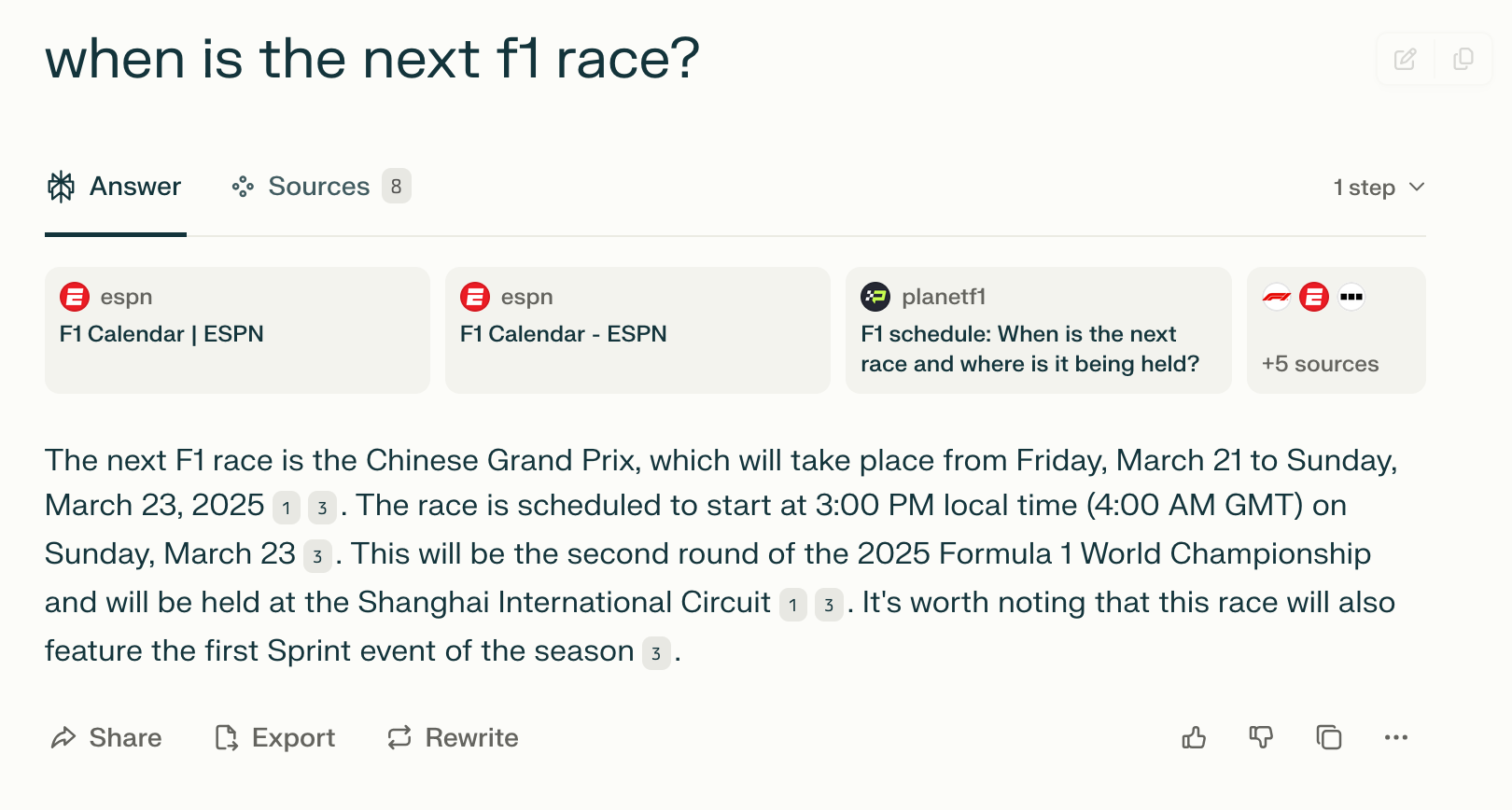

LLMs simply aren’t able to handle questions about current events on their own, because they don’t constantly consume the entire web and know what’s going on right now, which is what search engines have taught us to expect over the past few decades. This isn’t to say it’s impossible, though. Here’s what Perplexity says when I ask it the same thing:

It got it, but only because it did a traditional web search first, fed the results into an LLM, and then had the LLM summarize the data. ChatGPT does this as well if you manually enable the “web search” button before submitting your question, but most LLM tools don’t do this.
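Here’s a minimal sketch of that search-first pattern (usually called retrieval-augmented generation), assuming a hypothetical `web_search` function that returns canned results so the example runs offline. It also puts today’s date in the prompt, which is what the ChatGPT app appears to be doing above. The date, the search result, and the `example.com` URL are all made up for illustration, and the actual call to the model is left out because it depends on which provider you use.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class SearchResult:
    title: str
    url: str
    snippet: str


def web_search(query: str) -> list[SearchResult]:
    # Hypothetical stand-in for a real search API; returns canned results
    # so this sketch runs without network access.
    return [
        SearchResult(
            title="2025 F1 calendar",
            url="https://example.com/f1-2025",
            snippet="Chinese Grand Prix: March 23, 2025.",
        ),
    ]


def build_prompt(question: str, today: date, results: list[SearchResult]) -> str:
    # Give the model today's date plus fresh search results, and tell it to
    # answer only from those sources (or admit it doesn't know).
    sources = "\n".join(
        f"[{i}] {r.title} ({r.url}): {r.snippet}"
        for i, r in enumerate(results, start=1)
    )
    return (
        f"Today's date is {today.isoformat()}.\n"
        "Answer using only the sources below and cite them by number. "
        "If they don't contain the answer, say you don't know.\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {question}"
    )


question = "When is the next F1 race?"
# Hypothetical "today" for the example; a real app would use date.today().
prompt = build_prompt(question, date(2025, 3, 20), web_search(question))
print(prompt)  # this string is what would be sent to the LLM to summarize
```

The LLM never has to “know” the schedule here; it only has to read and summarize what the search step handed it, which is exactly the job it’s good at.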

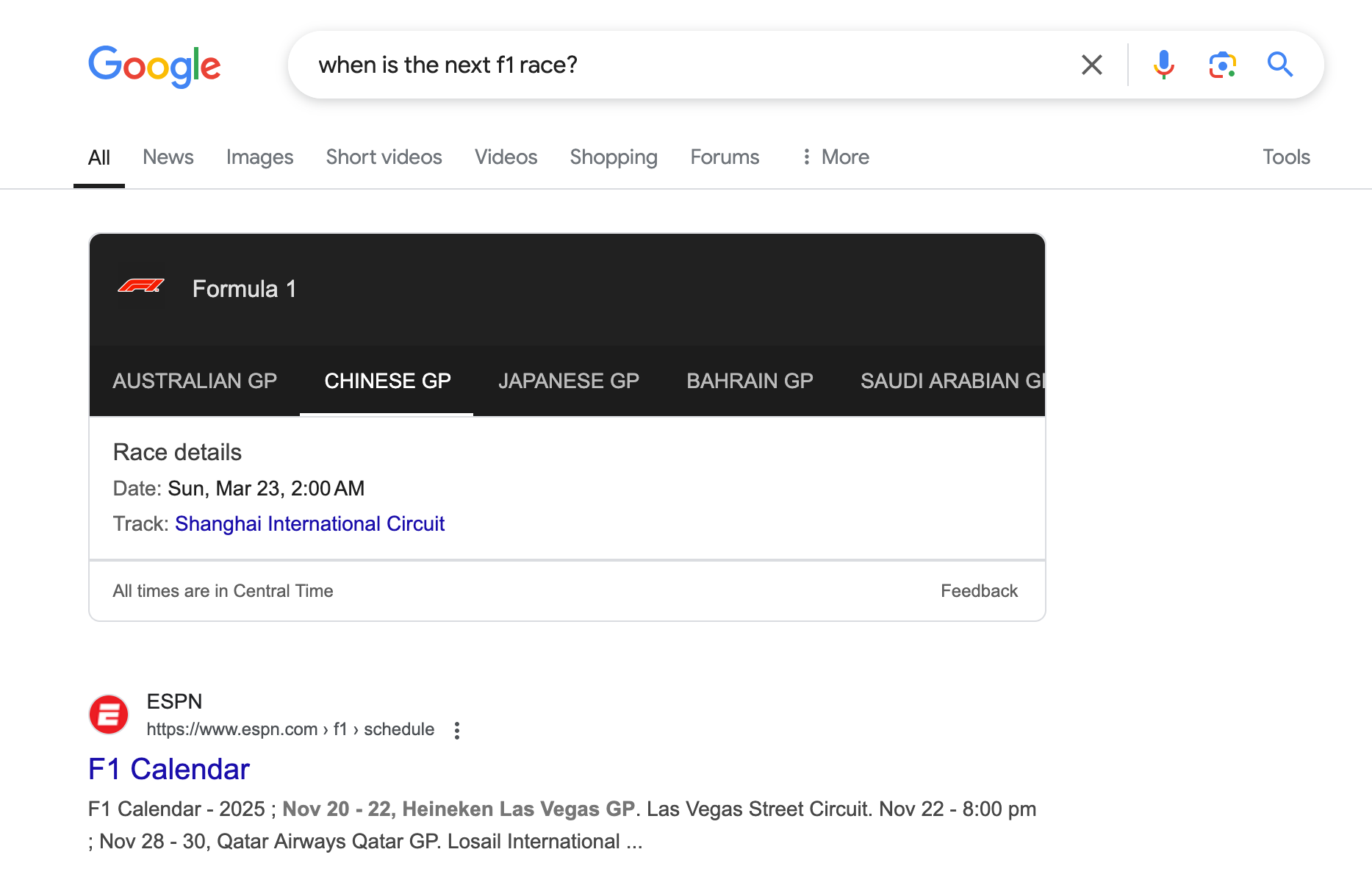

But you know what does this better than all these LLMs? Google:

Not only does it format the data nicely for me, it also makes the search results clearer, and it does all of this effectively instantly compared to the several seconds the LLMs took.

This is one example, but I’ve also seen people try to use ChatGPT as a calculator or ask Claude for the weather at their current location, and I sigh because these aren’t the right tools for those jobs. That said, the Google search box has been super powerful at training people to expect similar search fields to behave the same way. It doesn’t help that these LLM text boxes often look like Google and use “ask anything” as their placeholder text.

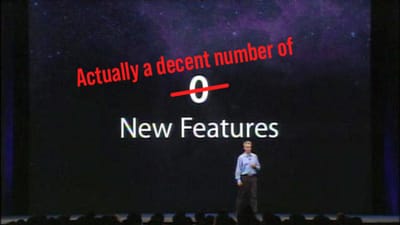

The simple fact of the matter is that not everything is best solved by an LLM, but LLM chatbots give the impression that they are good for everything. A further problem is that LLMs have a hard time saying they don’t know something, so they’ll always give you an answer whether it’s right or not. This is why I think Google is in such a spectacular position to have the best of all worlds. It knows where you are (if you let it) and can tell you the weather right now, it scrapes the web constantly so it can give you news that literally broke a few minutes ago, it can show a calculator if you ask it to do some math, and yes, it can detect when an LLM would be best suited to respond to your query and use the LLM for that specific case. That LLM is also backed by the most powerful search engine ever and can parse real-time data just like ChatGPT or Perplexity, but with even better search results.

But people really like ChatGPT and already use it for a lot, so I don’t blame them for using it for all sorts of things. The two things I’m looking for are:

- Users need to better understand how LLMs work and what they’re good for versus what they’re not good for (a tall order)

- LLM-powered chatbots need to be backed up by more traditional search and other utility functions (weather, sports, etc.) so that they can better serve users who are going to see a text field that looks like a Google search and will use it as such.
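To make the second point concrete, here’s a minimal sketch of a router that sends plain arithmetic to a real calculator, weather questions to a weather service, and time-sensitive questions to search, and only falls back to the LLM otherwise. The keyword rules and the tool names (`weather_api`, `search`, `llm`) are hypothetical; a real product would more likely use a classifier or the model’s own tool calling than a handful of regexes.

```python
import ast
import operator
import re

# Arithmetic operators the calculator route is allowed to evaluate.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.USub: operator.neg,
}


def calculate(expr: str) -> float:
    # Evaluate plain arithmetic with Python's parser instead of asking an LLM.
    def ev(node: ast.AST) -> float:
        if (isinstance(node, ast.Constant)
                and isinstance(node.value, (int, float))
                and not isinstance(node.value, bool)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.left), ev(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.operand))
        raise ValueError("not plain arithmetic")

    return ev(ast.parse(expr, mode="eval").body)


def route(query: str) -> str:
    # Pick a tool for the query; the tool names here are hypothetical.
    q = query.strip().lower()
    if re.fullmatch(r"[\d\s.+\-*/()]+", q):
        return "calculator"
    if "weather" in q:
        return "weather_api"
    if re.search(r"\b(today|latest|news|score|schedule|race|game)\b", q):
        return "search"
    return "llm"


def answer(query: str) -> str:
    tool = route(query)
    if tool == "calculator":
        try:
            return str(calculate(query))
        except (ValueError, SyntaxError, ZeroDivisionError):
            tool = "llm"  # looked like math but wasn't something we evaluate
    # The remaining tools are placeholders for real services.
    return f"[send to {tool}]"


print(answer("12 * (3 + 4)"))               # 84
print(answer("When is the next F1 race?"))  # [send to search]
```

The point isn’t the regexes; it’s that the text box should decide what kind of question it got before handing everything to a model.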

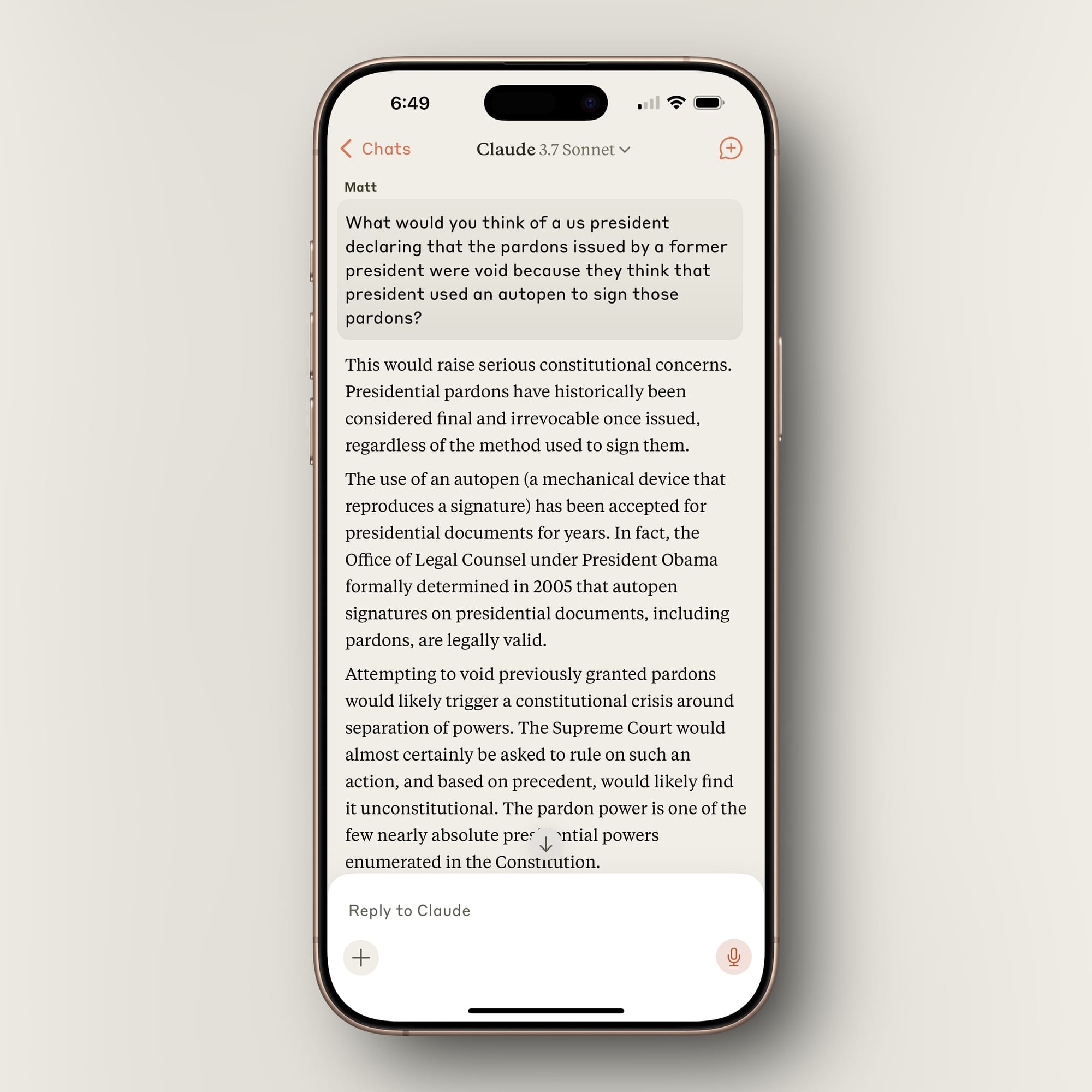

As a quick aside, I actually find “offline” chatbots like Claude really interesting to chat with about current events that happened after their training cutoff. Obviously, they can’t tell you what’s going on right now, but you can describe a situation as a hypothetical and they’ll discuss it without the “my political party did it so I like it” (or the reverse) baggage we all have. Of course, take it with a grain of salt, but it is an interesting exercise.